Mon, Dec 20, 2021

Go 1.18 is going to be released with generics support. Adding generics to Go was a multi-year effort and was a difficult one. Go type system is not a traditional type system and it was not possible just to bring an existing generics implementation from other language and be done. The current proposal was accepted after years of user research, experiments and discussions. The proposal got iterated a few times during the implementation phase. I found the final result delightful.

The proposal notes several limitations of the current implementation. When I was first reviewing the proposal a year ago, one limitation stood out the most: no parameterized methods. As someone who has been maintaining various database clients for Go, the limitation initially sounded as a major compromise. I spent a week redesigning our clients based on the new proposal but felt unsatisfied. If you are coming from another language to Go, you might be familiar with the following API pattern:

// NOTE: NOT POSSIBLE TO COMPILE THIS CODE AT THE MOMENT.

db, err := database.Connect("....")

if err != nil {

log.Fatal(err)

}

all, err := db.All[Person](ctx) // Reads all person entities

if err != nil {

log.Fatal(err)

}

Once you open a connection, you can share among entity types by writing generic

methods that have access to the connection. We often see this pattern implemented as generic methods, and not being able to compile the snippet above felt unideal to achieve a similar result.

Being able to write generic method bodies in cases like above are useful for framework

developers to handle boilerplate and common tasks in the framework while keeping

the implementation generic from entity types.

Facilitators

If you are looking into the Go generics for the first time, it may not be

immediately clear at first that the language allows to have parameterized receivers. Parameterized receivers

are a useful tool and helped me develop a common pattern, facilitators, to overcome the shortcomings of having no parameterized methods. Facilitators are simply

a new type that has access to the type you wished you had generic methods on. For example, if you are an ORM framework designer and want to provide

several methods of querying a table, you introduce an intermediate type, Querier, and do the wiring to Client via NewQuerier. Then, Querier allows you to write generic querying functions against types provided by your users. I found it useful to keep the facilitators in the same package to have full access to unexported fields but it’s

not necessarily.

package database

type Client struct{ ... }

type Querier[T any] struct {

client *Client

}

func NewQuerier[T any](c *Client) *Querier[T] {

return &Querier[T]{

client: c,

}

}

func (q *Querier[T]) All(ctx context.Context) ([]T, error) {

// implementation

}

func (q *Querier[T]) Filter(ctx context.Context, filter ...Filter) ([]T, error) {

// implementation

}

Later, your users can crete a new querier of any entity type and use

the existing client connection to query the database:

var client *database.Client // initiate

querier := database.NewQuerier[Person](client)

all, err := querier.All(ctx)

if err != nil {

log.Fatal(err)

}

for _, person := range all {

log.Println(person)

}

Facilitators make the limitation of having no generic methods disappear and

they add only a tiny bit of verbosity.There is nothing novel about this pattern but it needs a name for those who don’t know how to use parameterized receivers to achieve the same.

A playground is available in case you want to try it out.

Fri, Dec 3, 2021

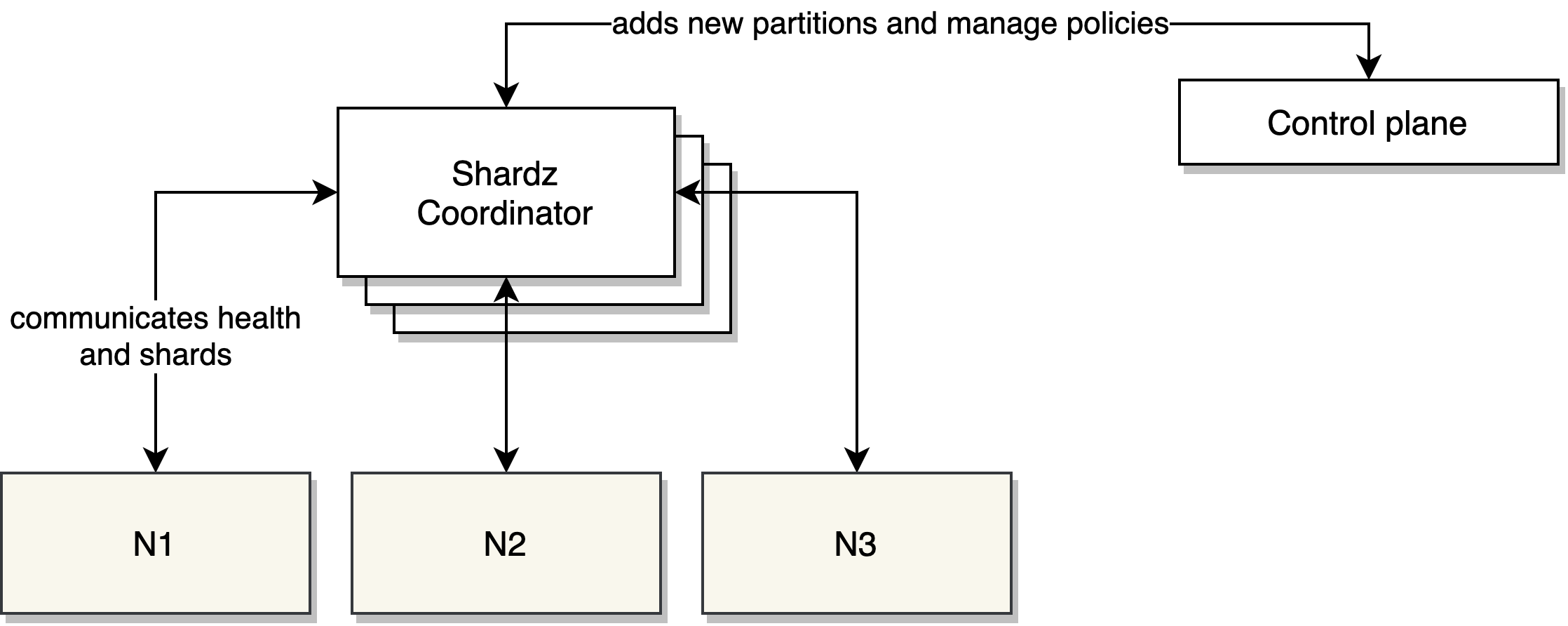

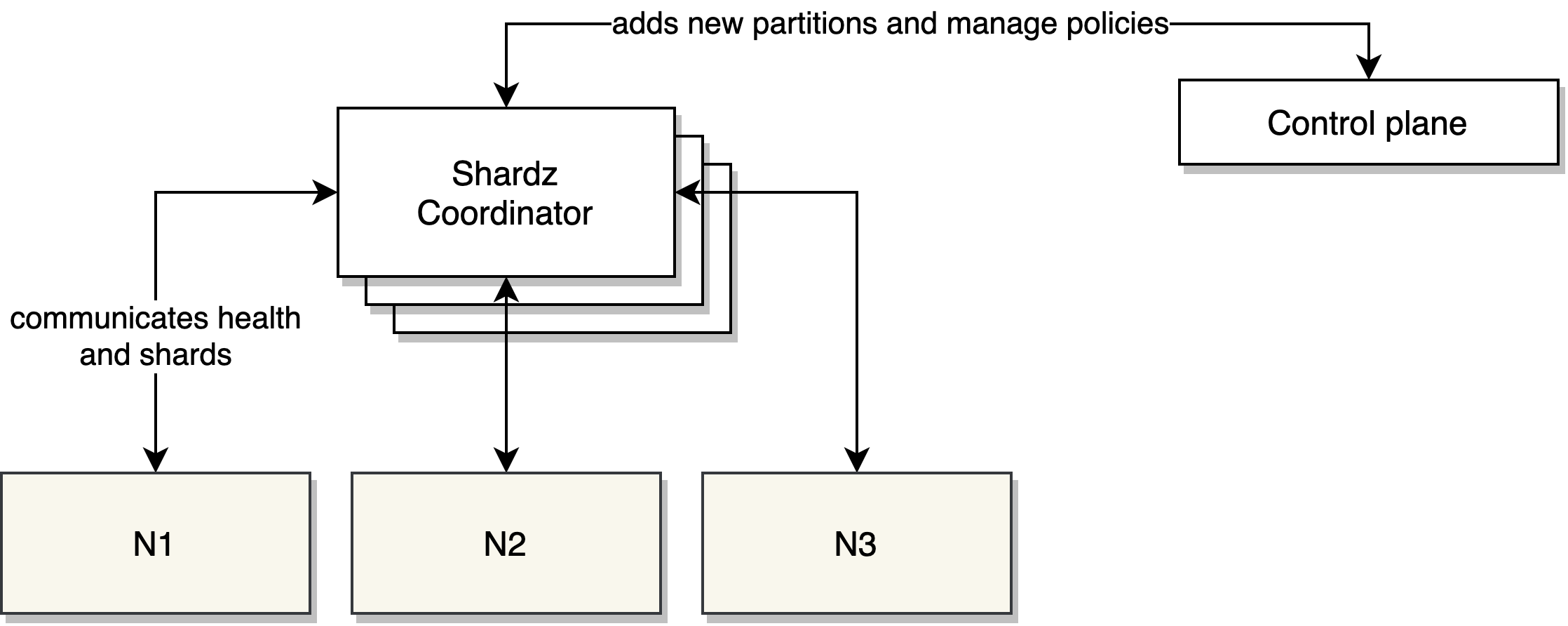

Shard coordination has been one of the bigger challenges to design

sharded systems especially for engineers with little experience in the subject.

Companies like Facebook have been using general purpose shard coordinators,

e.g. Shard Manager, and suggesting that general

purpose sharding policies have been widely successful. A general

purpose sharding coordinator is not a solution to all advanced sharding needs,

but a starting point for average use cases.

It’s a framework to think about core abstractions

in sharded systems and providing a protocol that orchestrate sharding

decisions. In the last few weeks, I’ve been working on an extendible general purpose

shard coordinator, Shardz. In this article, I will explain

the main concepts and the future work.

Sharding

Sharding is a concept of sharding load to different nodes in a cluster

to horizontally scale systems. We can talk about two commonly used approaches

to sharding strategies.

Hashing

Hashing is used to map an arbitrary key to a node in a cluster. Keys and

hash functions are chosen carefully for load balancing to be effective.

With this approach, each incoming key is hashed and its modulo is

calculated to associate the key with one of the available nodes. Then,

the request is routed to that node to be served.

hash_function(key) % len(nodes)

This approach, even though its shortcomings, offer an easy to implement way

of designating a location to the incoming key. Consistent hashing can improve

the excessive migration of shards upon node failure or addition.

This approach is still commonly used in databases,

key/value caches, and web/RPC servers.

One of the significant shortcomings of hashing is that the destination node is determined

when handling an incoming request. The nodes in the system don’t know which

shards they are serving in advance unless they can calculate the key ranges they need to serve. This situation makes

it hard for nodes to preload data or it makes it harder to implement

strict replication policies. The other difficulty is that existing shards

cannot be easily visualized for debugging purposes unless you can query

where key ranges live.

Lookup tables

An alternative to hashing is to generate lookup tables. In this approach,

you keep track of available nodes and partitions you want to serve. Lookup

tables are regenerated each time a node or a partition is added or removed,

and is the source of the truth which node a partition should be served at.

Lookup tables can also help enforcing replication policies, e.g. ensure there

are at least two replicas being served at different nodes.

Lookup tables are generally easy to implement when you have homogenous nodes.

In heterogenous cases, node capacity can be used to

influence the distribution of partitions.

Shardz chose to only tackle the homogenous nodes for now but it’s trivial to

advance the coordinator to consider nodes with different capacity in the future.

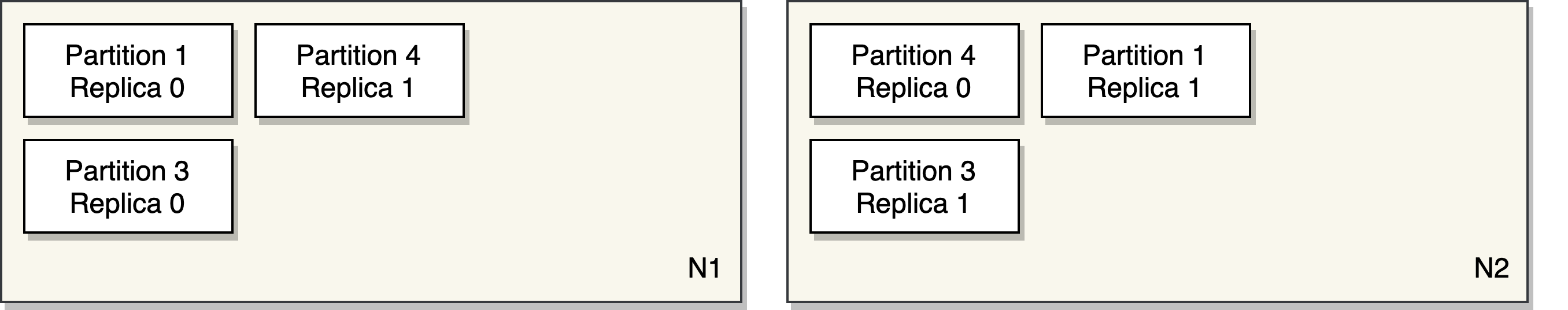

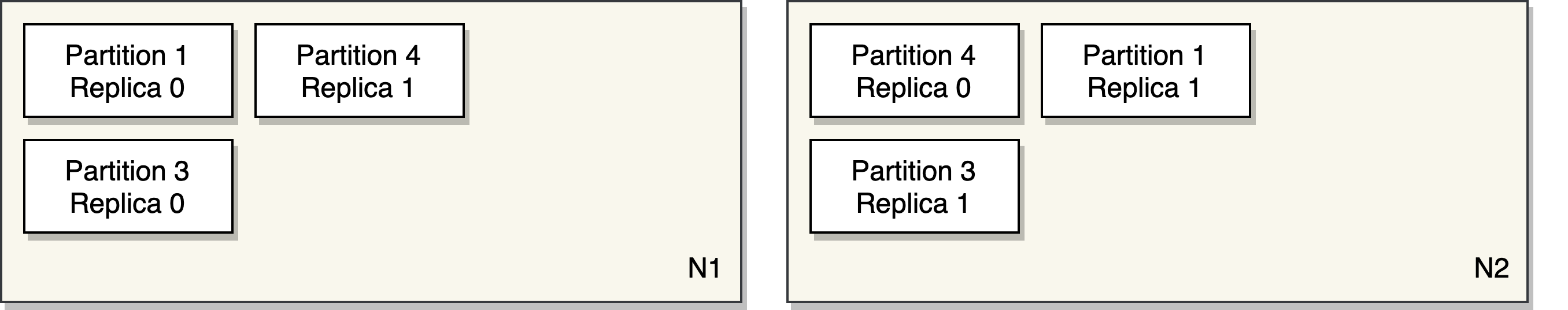

P x N

Sharding with lookup tables is essentially a P x N problem where

you have P partitions and N nodes. The goal is to distribute P

partitions on the available nodes at any time. Most systems require

replication for fault tolerance, so we decided to identify a P with

a unique identifier and its replica number. At any times, a P should be

replicated on Ns based on how many replicas are required.

type P struct { ID string, Replica int }

type N struct { Host string }

We expect users to manage partitions separately and talk to the Shardz

coordinator to schedule them on the available nodes. The partitions

should be uniquely identified within the same coordinator.

Sharder

A core concept in Shardz is the Sharder interface. A sharder allows

users to register/unregister Ps and Ns. Then, users can query where

a partition is being served. Sharder interface can be implemented with

hashing or lookup tables. We don’t enforce any implementation details

but expect the following interface to be satisfied.

type Sharder interface {

RegisterNodes(n ...N) error

UnregisterNodes(n ...N) error

RegisterPartitions(ids ...string) error

UnregisterPartitions(ids ...string) error

Partitions(n N) ([]P, error)

Nodes(partitionID string) ([]N, error)

}

Sharders can be extended to satisfy custom needs such as implementing

custom replication policies, scheduling replicas in different availability

zones, finding the correct VM type/size to schedule the partition.

Work stealing replica sharder

Shardz comes with general use Sharder implementations. The default sharder

is a work stealing sharder that enforce a minimum number of replicas for

each partition. ReplicaSharder implements a trivial approach to find

the next available node by looking at the least overloaded node that

doesn’t already serve a replica of the partition. If this approach fails,

we will look for the first node that doesn’t already serve a replica of the partition.

If everything fails, we pick a random node and schedule the partition on it.

func nextNode(p P) {

// Find the node with the lowest load.

// If the node is not already serving p.ID, return the node.

// Find all the available nodes.

// Randomly loop for a while until you find a node

// that doesn't serve p.ID (a replica).

// Return the node if something is found in acceptable number of iterations.

// Randomly pick a node and return.

}

Additional to the partition positioning, work stealing is triggered when

a new node joins or a partition is unregistered. Work stealing can be triggered

as many as times possible, e.g. routinely to avoid unbalanced load on nodes.

func stealWork() {

// Calculate the average load on nodes.

// Find nodes with 1.3x more load than average.

// Calculate how many partitions need to be removed

// from the overloaded node. Remove the partitions.

// For each removed partition, call nextNode to find

// a new node to serve the partition.

}

Protocol

Shardz have been heavily influenced by Shard Manager when it comes to making it easier for servers to report their status and coordinate with the manager.

A worker node creates a server that implements the protocol. ServeFn and

DeleteFn are the hooks when coordinator notifies the worker which partitions it should

serve or stop serving.

import "shardz"

server, err := shardz.NewServer(shardz.ServerConfig{

Manager: "shardz.coordinator.local:7557", // shardz coordinator endpoint

Node: "worker-node1.local:9090",

ServeFn: func(partition string) {

// Do work to serve partition.

},

DeleteFn: func(partition string) {

// Do work to stop serving partition.

},

})

if err != nil {

log.Fatal(err)

}

http.HandleFunc("/shardz", server.Handler)

log.Fatal(http.ListenAndServe(listen, nil))

At startup, worker node automatically pings the coordinator to register itself.

Then Shardz coordinator will ping the worker back periodically to check its status.

The partitions that need to be served by the worker is periodically distributed

and the ServeFn and DeleteFn functions are triggered automatically if

partitions changed.

At a graceful shutdown, worker node automatically reports that it’s going

away and give the coordinator a change to redistribute its partitions.

Fault tolerance

Shardz is designed to run in a clustered mode where there will

be multiple replicas of the coordinator at any time. The coordinator will have

a single leader that is responsible for sharding decision and propagating

them to others. If leader goes away, another replica becomes the leader.

ZooKeeper is used to coordinate the Shardz coordinators.

Future work

Shardz is still in the early stages but it’s a promising concept

to build a reusable multi purpose sharding coordinator. It has potential

to lower to entry barrier to design sharded systems. The next steps

for the project:

- Fault tolerance. The coordinator still has work to be finished when running

in the cluster mode.

- New Sharder implementations. Project aims to provide multiple

implementation with different policies to meet the needs. I desire to open

source this project to allow community to contribute.

- Language support for protocol implementation. We only have support

for Go and should expand the server implementation to capture more languages

to make it easy just to import a library to add Shardz support to any process.

- Visualization and management tools. Dashboards and custom control planes

to monitor, debug and manage shards.

- An ecosystem that speaks Shardz. It’s an ambitious goal but a unified

control plane for shard management would benefit the entire industry.

Wed, Jul 22, 2020

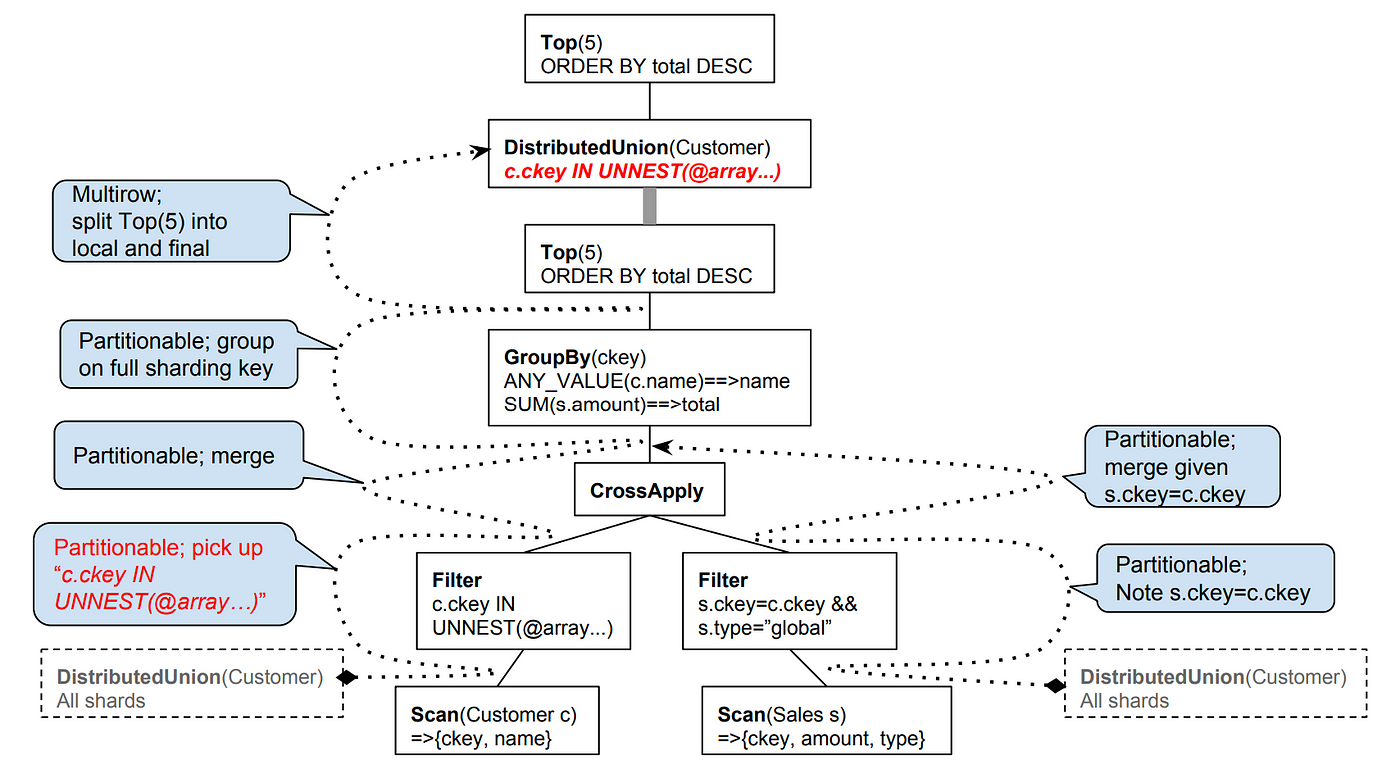

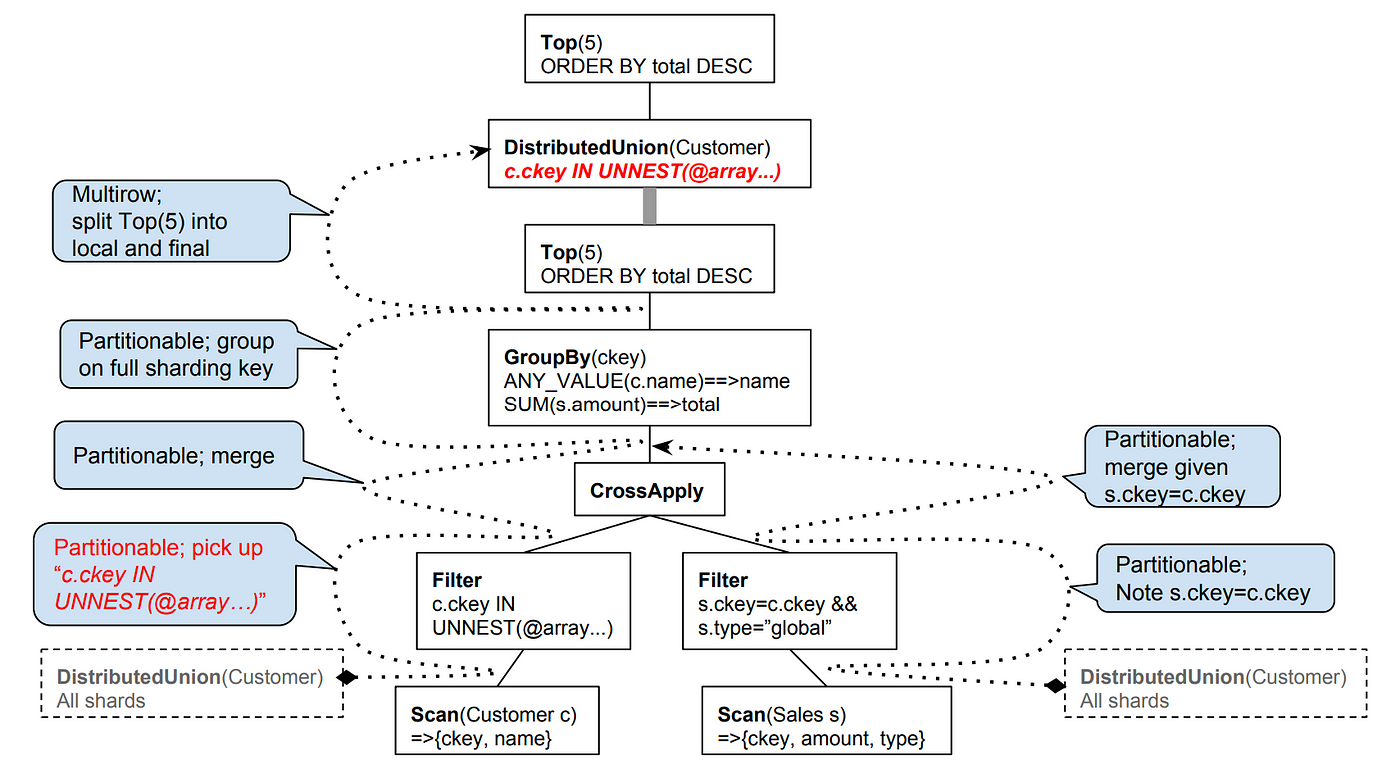

Spanner is a distributed database Google initiated a while ago to build a highly available and highly consistent database for its own workloads. Spanner was initially built to be a key/value and was in a completely different shape than it is today and it had different goals. Since the beginning, it had transactional capabilities, external consistency and was able to failover transparently. Over time, Spanner adopted a strongly typed schema and some other relational database features. In the last years, it added SQL support*. Today we are improving both the SQL dialect and the relational database features simultaneously. Sometimes there is confusion whether Spanner supports SQL or not. The short answer is yes. The long answer is this article.

Early years

Spanner was initially built for internal workloads and no one was able to see Google is going to initiate a cloud business and externalize Spanner. If you don’t know much about Google, our internal stack is often considered as a different universe. At Google, all systems including storage and database services provide their own proprietary APIs and clients. Are you planning to use your favorite ORM library when you join to Google? Unfortunately, it’s not possible. Our infrastructure services provide their own Stubby/gRPC APIs and client libraries. This is a minor disadvantage if you care about API familiarity but it’s a strong differentiator because we can provide more expressive APIs that represent the differentiated features of our infrastructure.

Differentiated features are often poorly represented in common APIs. One size doesn’t fit all. Common APIs can only target the common features. Distributed databases are already vastly different than traditional relational databases. I’ll give two examples in this article to show how explicit APIs make a difference.

Distributed systems fail differently and more often. In order to deal with this problem, distributed systems implement retrying mechanisms. Spanner clients transparently retries transactions when we fail to commit them. This allows us not to surface every temporary failure to the user. We transparently retry with the right backoff strategy.

In the following snippet, you see some Go code starting a new read-write transaction. It takes a function where you can query and manipulate data. When there is an abort or conflict, it retries the function automatically. ReadWriteTransaction documents this behavior and documents that the function should be safe to retry (e.g. telling the developers don’t hold application state). This allows us to communicate the unique reality of distributed databases to the user. We can also provide capabilities like auto-retries which are harder to implement in traditional ORMs.

import "cloud.google.com/go/spanner"

_, err := client.ReadWriteTransaction(ctx, func(ctx context.Context, txn *spanner.ReadWriteTransaction) error {

// User code here.

})

Another example is the isolation levels. Spanner implements an isolation level better than the strictest isolation level (serializable) described in the SQL standard. Spanner doesn’t allow you to pick anything less strict for read/write transactions. But for multi-regional setups and read-only transactions, providing the strongest isolation is not always feasible. Our limits are tested by the speed of the light. For users who are ok with slightly stale data, Spanner has capabilities to provide stale reads. Stale reads allow users to read the version available in the regional replica. They can set how much staleness they can tolerate. For example, the transaction below can tolerate up to 10 seconds.

import "cloud.google.com/go/spanner"

client.ReadOnlyTransaction().

WithTimestampBound(spanner.MaxStaleness(10*time.Second)).

Query(ctx, query)

Staleness API allows us to explicitly express how snapshot isolation works and how Spanner can go and fetch the latest data if replica is very out of date. It also allows us to highlight how multi-regional replication is a hard problem and even with a database like Spanner, you may consider compromising from consistency for better latency characteristics in a multi-regional setup.

F1

F1 was the original experiment for the first steps towards having SQL support in Spanner. F1 is a distributed database at Google that is built on top of Spanner. Unlike Spanner, it supported:

- Distributed SQL queries

- Transactionally consistent secondary indexes

- Change history and stream

It implemented these features in a coordination layer on top of Spanner and handed off everything else to Spanner.

F1 was built to support our Ads products. Given the nature of the ads business and the complexity of our Ads products, being able to write and run complex queries was critical. F1 made Spanner more accessible to business-logic heavy systems.

Spanner in Cloud

Fast forward, Google Cloud launched Spanner for our external customers. When it was first released, it only had SQL support to query data. It lacked INSERT, UPDATE and DELETE statements.

Given it didn’t fully a SQL database back then, it also lacked driver support for JDBC, database/sql and similar. Driver support became a possibility when Cloud Spanner implemented a Data Manipulation Language (DML) support for inserts, updates and deletes.

Today, Cloud Spanner supports both DDLs (for schema) and DMLs (for data). Cloud Spanner uses a SQL dialect used by Google. ZetaSQL, a native parser and analyzer of this dialect has open sourced a while ago. As of today, Cloud Spanner also provides a query analysis tool.

Next?

There are current challenges originated from the differences present in our SQL dialect. This is an area we are actively trying to improve. Not just we don’t want our users to deal with a new SQL flavor, the current situation is also slowing down our work on ORM integrations. Some of the ORM frameworks hardcode SQL when generating queries and giving drivers little flexibility to override behavior. In order to avoid any inconsistencies, we are trying to close the differences with popular dialects.

Dialect differences are not the only problem affecting our SQL support. One other significant gap is the lack of some of the common traditional database features. Spanner never supported features like default values or autogenerated IDs. As we are improving the dialect differences, it’s always in our radar to simultaneously address these significant gaps.

(*) The initial work on Spanner’s querying work is published as Spanner: Becoming a SQL System.

Wed, Jun 17, 2020

Update: The proposal draft has been

revisited to use brackets

instead of parenthesis. This article will be updated with

the new syntax soon.

Ian Lance Taylor and Robert Griesemer have been working on a

generics proposal

for Go for a while.

Unlike other proposals, a highly significant language

change as such generics will require experimentation and comprehensive

feedback before it can be finalized and submitted as

a formal language change proposal.

As an initial step, they have been working on an transitioning tool

so the Go users can put their ideas into test and have working

understanding of the proposal. go2go is a tool available if you

install Go from the source.

Then you can build, run and test .go2 files with generics.

$ go tool go2go

go2go <command> [arguments]

The commands are:

build translate and build packages

run translate and run list of files

test translate and test packages

translate translate .go2 files into .go files

Alternatively, they are providing the same capability from the

go2go playground, so the Go developers

can easily test their ideas without having to install Go from source.

I highly recommend you to take a look at the playground to begin.

When I was going through a draft of the proposal to put some of my

ideas into test, there were many gotchas moments I had. In this

article, I’ll share some of them to help you with your own experimentation

efforts. This article is a living document and might be updated as I discover more.

Please read

the proposal

first, the current version

of the proposal contains most of the answers.

If you have similar questions, report them to provide feedback.

Readability of this feature will be significantly important and your

feedback is very critical given generics

make languages more complicated to read.

What can be generic?

Functions can be generic, similar to the Print function below.

At the call site, Print can be called against any type

that will determine what T is.

func Print(type T)(s []T) {

for _, v := range s {

fmt.Print(v)

}

}

Print(int)([]int{1, 2, 3}) // will print 123

Structs can also rely on generic types.

A list that uses a generic item

type can be defined as:

type List(type T) struct {

list []T

}

When constructing a new List, users provide the item type. This is

how you can use the same List implementation

against different item types. In the example below we are creating

a list of integers:

list := List(int){}

Interfaces can represent cases where methods need to use

generic types. For example, a database

iterator interface is provided below. Implementors of this interface

will provide generic mechanisms that will iterate over

a user-specified type:

type Iterator(type T) interface {

Next() (T, error)

}

Currently, methods cannot have their own generic signatures.

The Do function below is accepting a generic type, C as its arguments.

The code will not compile. If you have cases where being able

to provide generic methods

type List(type T) struct {

list []T

}

func (l *List(T)) Do(type C)(c []C) {}

prog.go2:20:26: methods cannot have type parameters

Can receivers accept generic types?

Yes. If you have a generic concrete type, its recievers

can accept generic types and refer to the generic type

in their arguments. Below, the generic List can use any

user provided type as its item type. Users will be able

to call Add with the objects of type T – a type they

provided to construct List(T).

type List(type T) struct {

list []T

}

func (l *List(T)) Add(item T) {}

How to constraint a generic type?

Generic types can be of a particular interface or the empty interface.

func Print(type T)(s []T) {} // accepts every type

func Print(type T io.Writer)(s []T) {} // accepts types if T implements io.Writer

How to allocate a generic slice?

Defining, initializing or allocating generic slices are not different

than any regular slice. All the operations on T below are valid operations:

func Do(type T)(s []T) {

var t []T

t = make([]T, 10)

t = append(t, s...)

}

Can you compose generic interfaces?

Yes. Interface composition is working as expected.

If you want to provide a specialized

version of the Iterator(type T), you can defer to composition.

type Iterator(type T) interface {

Next() (T, error)

}

type IteratorCloser(type T) interface {

(Iterator(T))

Close() error

}

How to call a generic functions generically?

Calling generic functions from a generic function is pretty

straightforward. You can pass the generic type identifier, T,

to another function.

func Print(type T)(s []T) {

for _, v := range s {

PrintOne(T)(v)

}

}

func PrintOne(type T)(s T) {

fmt.Print(s)

}

At first, this confused me because argument identifiers in

Go are lower case. So, I didn’t see the T is another identifier

until I’m told so. The upside of having T in upper case is

it allows me to differentiate the generic type list from

the argument list. I hope the upper case becomes the convention.

Are type assertions possible?

Yes, but this confused me initially. If you tried to type cast

directly on the generic variable such as below, you will get an

error saying r is not an interface type, even though r implements

io.Reader.

func Do(type T io.Reader)(r T) {

switch r.(type) {

case io.ReadCloser:

fmt.Println("ReadCloser")

}

}

prog.go2:19:9: r (variable of type T) is not an interface type

But when you explicitly cast r to an interface before casting:

func Do(type T io.Reader)(r T) {

switch (interface{})(r).(type) {

case io.ReadCloser:

fmt.Println("ReadCloser")

}

}

Tue, May 19, 2020

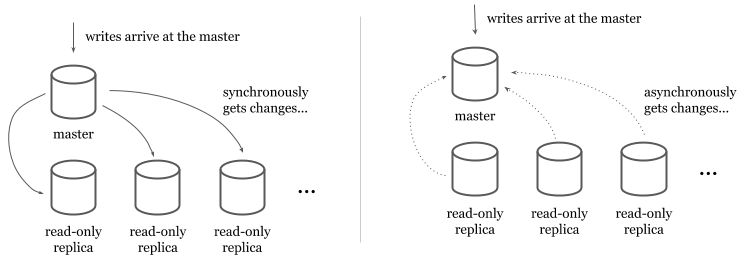

Spanner is a relational database with 99.999% availability which is roughly 5 mins a year. Spanner is a distributed system and can span multiple machines, multiple datacenters (and even geographical regions when configured). It splits the records automatically among its replicas and provides automatic failover. Unlike traditional failover models, Spanner doesn’t failover to a secondary cluster but can elect an available read-write replica as the new leader.

In relational databases, providing both high availability and high consistency in writes is a very hard problem. Spanner’s synchronous replication, the use of dedicated networking and Paxos voting provides high availability without compromising consistency.

High availability of reads vs writes

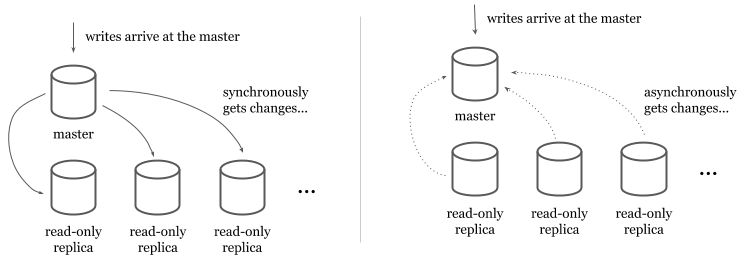

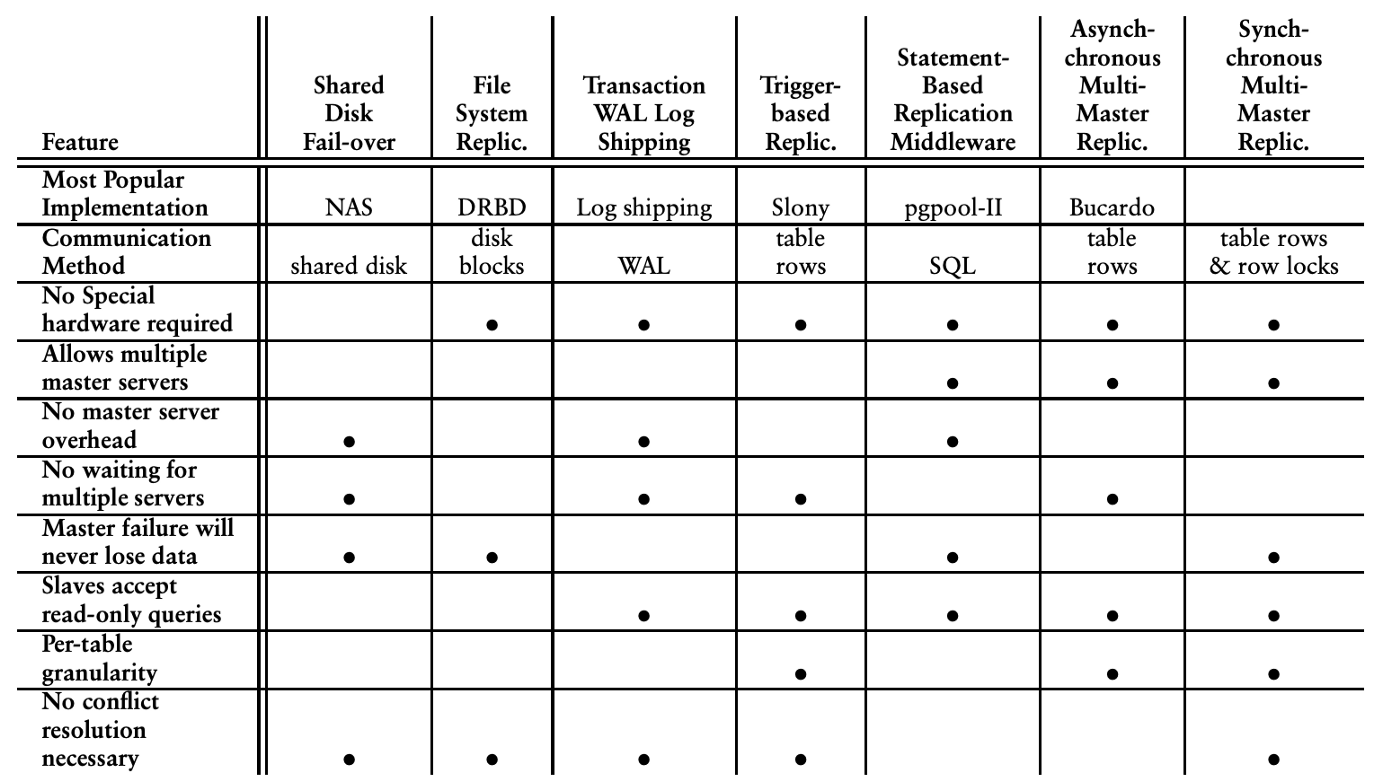

In traditional relational databases (e.g. MySQL or PostgreSQL), scaling and providing higher availability to reads is easier than writes. Read-only replicas provide a copy of the data read-only transactions can retrieve from. Data is replicated to the read-only replicas from a read-write master either synchronously or asynchronously.

In synchronous models, master synchronously writes to the read replicas at each write. Even though this model ensures that read-only replicas always have the latest data, it makes the writes quite expensive (and causes availability issues for writes) because the master has to write to all available replicas before it returns.

In asynchronous models, read-only replicas get the data from a stream or a replication log. Asynchronous models make writes faster but introduce a lag between the master and the read-only replicas.

Users have to tolerate the lag and should be monitoring it to identify replication outages. The asynchronous writes make the system inconsistent because not all the replicas will have the latest version of the until asynchronous synchronization is complete. The synchronous writes make data consistent by ensuring all replicas got the change before a write succeeds.

Horizontally scaling reads by adding more read replicas is only part of the problem.

Scaling writes is a harder problem. Having more than one master is introducing additional problems. If a master is having outage, other(s) can keep serving writes without users experiencing downtime. This model requires replication of writes among masters. Similar to read replication, multi-master replication can be implemented asynchronously or synchronously. If implemented synchronously, it often means less availability of writes because a write should replicate in all masters and they should be all available before it can succeed. As a tradeoff, multi-master replication is often implemented with asynchronous replication but it negatively impacts the overall system by introducing:

- Looser consistency characteristics that violate ACID promises.

- Increased risk of timeouts and communication latency.

- Necessity for conflict resolution between two or more masters if conflicting updates happened but not communicated.

Due to the complexity and the failure modes multi-master replication introduces, it’s not a commonly preferred way of providing high availability in practice.

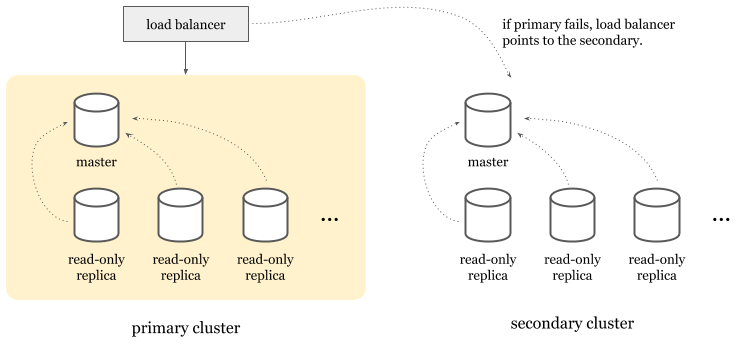

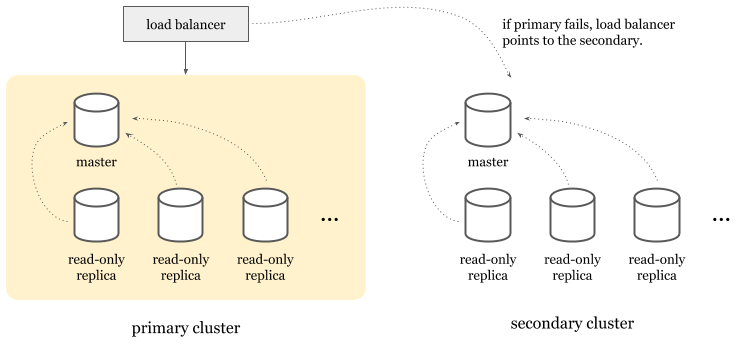

As an alternative, high-availability clusters are a more popular choice. In this model, you’d have an entire cluster that can take over when the primary master goes down. Today, cloud providers implement this model to provide high availability features for their managed traditional relational database products.

Topology

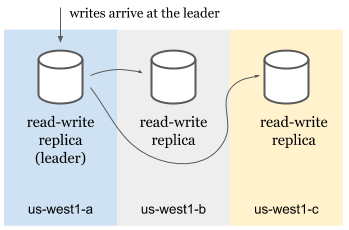

Spanner doesn’t use high availability clusters but approaches to the problem from a different angle. A Spanner cluster* contains multiple read-write, may contain some read-only and some witness replicas.

- Read-write replicas serve reads and writes.

- Read-only replicas serve reads.

- Witnesses don’t serve data but participate in leader election.

Read-only and witness replicas are only used for multi-regional Spanner clusters that can span across multiple geographical regions. Single region clusters only use read-write replicas. Each replica lives in a different zone in the region to avoid single point of failure due to zonal outages.

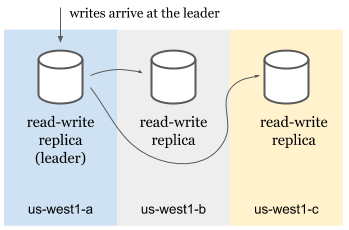

Splits

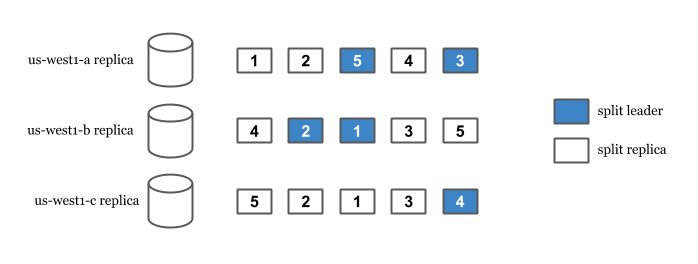

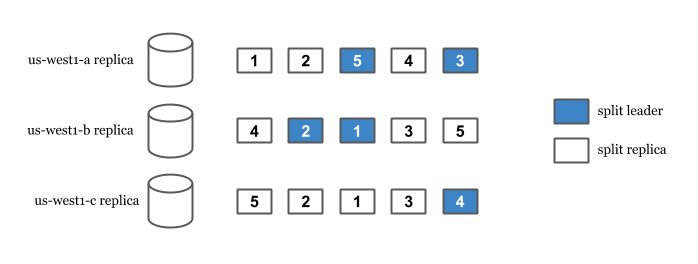

Spanner’s replication and sharding capabilities come from its splits. Spanner splits data to replicate and distribute them among the replicas. Split happens automatically when Spanner detects high read or high write load among the records. Each split is replicated and has a leader replica.

When a write arrives, Spanner finds the split the row is in. Then, we look for the leader of that split and route the write to the leader. This is true even in multi-region setups where user is geographically closer to another non-leader read-write replica. In the case of an outage of the leader, an available read-write replica is elected as the leader and user’s write is served from there.

In order for a write to succeed, a leader needs to synchronously replicate the change to the other replicas. But isn’t this impacting the availability of the writes negatively? If writes need to wait for all replicas to succeed, a replica can be a single point of failure because writes wouldn’t succeed until all replicas replicate the change.

This is where Spanner does something better. Spanner only requires a majority of the Paxos voters to successfully write. This allows writes to succeed even when a read-write replica goes down. Only the majority of the voters are required not all of the read-write replicas.

Synchronous replication

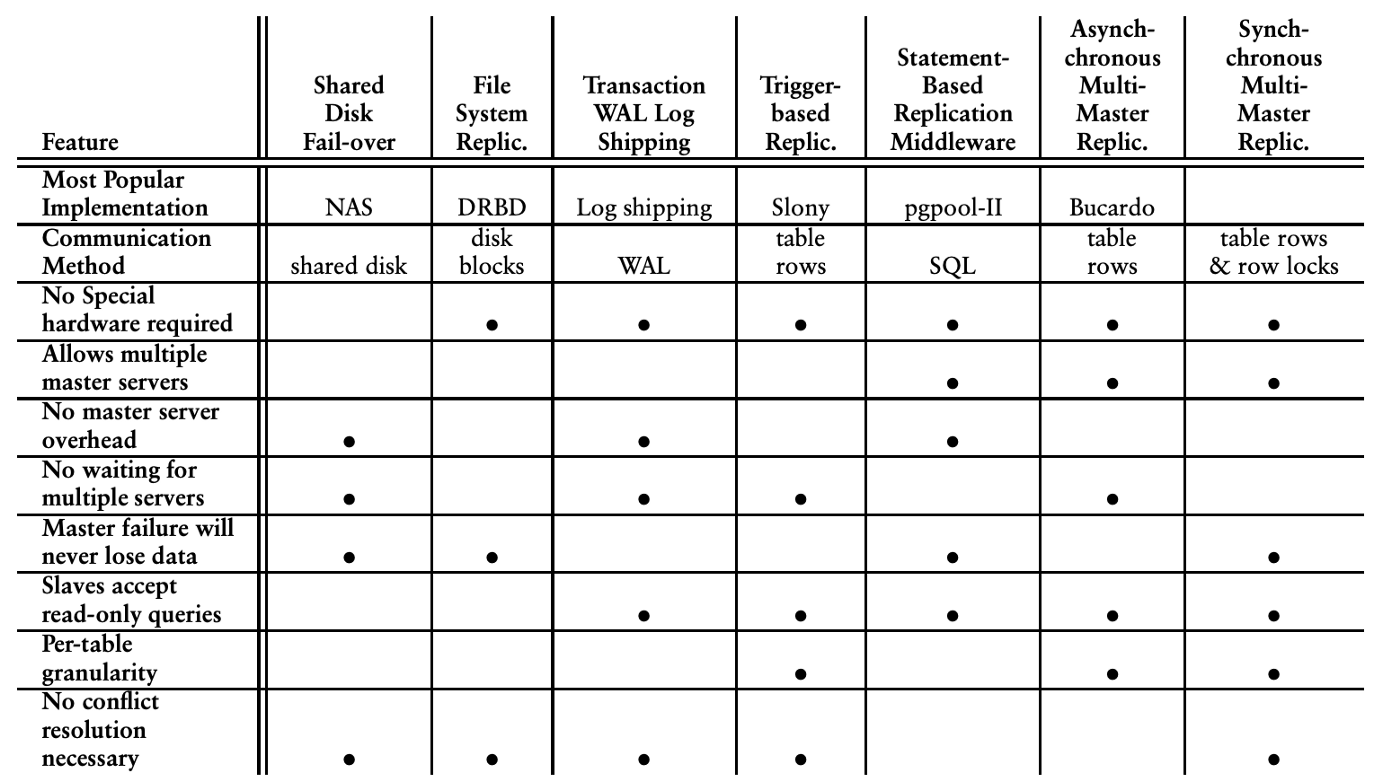

As mentioned above, synchronous replication is hard and impacts the availability of the writes negatively. On the other hand when replication happens asynchronously, they cause inconsistencies, conflicts and sometimes data loss. For example, when a master becomes unavailable due to a networking issue, it may still have committed changes but might have not delivered them to the secondary master. If the secondary master updates the same records after a failover, data loss can happen or conflict resolution may be required. PostgreSQL provides a variety of replication models with different tradeoffs. The tradeoffs summary below can give you a very high level idea of how many different concerns to worry about when designing replication models.

A summary of various PostgreSQL replication models and their tradeoffs:

Spanner’s replication is synchronous. Leaders have to synchronously communicate with other read/write replicas about the change and confirm it in order for a write to succeed.

Two-phase commit (2PC)

While writes only affecting a single split uses a simpler and faster protocol, if two or more splits are required for a write transaction, two-phase commit (2PC) is executed. 2PC is infamously known as “the anti-availability protocol” because it requires participation from all the replicas and any replica can be a single point of failure. Spanner still serves writes even if some of the replicas are unavailable, because only a majority of voting replicas are required in order to commit a write.

Network

Spanner is a distributed system and is inherently affected by problems that are impacting distributed systems in general. Networking itself is a factor of outages in distributed systems. On the other hand, Google cites only 7.6% of the Spanner failures were networking related. Spanner’s 99.999% availability is not highly affected from networking outages. This is mostly because it runs on Google’s private network. Years of operational maturity, reserved resources, having control over upgrades and hardware makes networking not a significant source of outages. Eric Brewer’s earlier article explains the role of networking in this case more in detail.

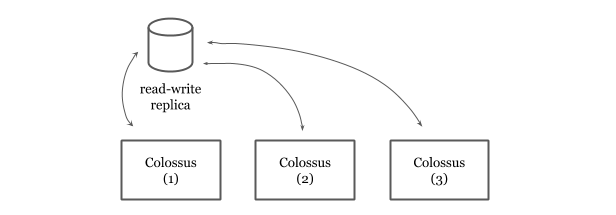

Colossus

Spanner’s durability guarantees come from Google’s distributed file system, Colossus. Spanner also mitigates some more risk by depending on Colossus. The use of Colossus allows us to have the file storage decoupled from the database service. Spanner is a “shared nothing” architecture and because any server in a cluster can read from Colossus, replicas can recover quickly from whole-machine failures.

Colossus also provides replication and encryption. If a Colossus instance goes down, Spanner can still work on the data via the available Colossus instances. Colossus encrypts data and this is why Spanner provides encryption at rest by default out of the box.

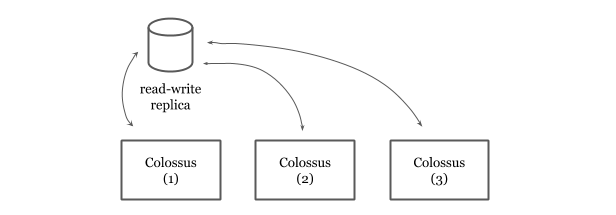

Spanner read-write replicas hands off the data to Colossus where data is replicated for 3 times. Given there are three read-write replicas in a Spanner cluster, this means the data is replicated for 9 times.

Automatic Retries

As repeatedly mentioned above, Spanner is a distributed system and is not magic. It experiences more internal aborts and timeouts than traditional databases when writing. A common strategy in distributed systems in order to deal with partial and temporary failures is to retry. Spanner client libraries provide automatic retries for read/write transactions. In the following Go snippet, you see the APIs to create a read-write transaction. The client automatically retries the body if it fails due to aborts or conflicts:

import "cloud.google.com/go/spanner"

_, err := client.ReadWriteTransaction(ctx, func(ctx context.Context, txn *spanner.ReadWriteTransaction) error {

// User code here.

})

One of the challenges of developing ORM framework support for Google Cloud Spanner was the fact most ORMs didn’t have automatic retries, therefore their APIs didn’t give developers a sense that they shouldn’t maintain any application state in the scope of a transaction. In contrast, Spanner libraries care a lot of retries and make an effort to automatically deliver them without creating extra burden to the user.

Spanner approaches to sharding and replication differently than traditional relational databases. It utilizes Google’s infrastructure and fine-tunes several traditionally hard problems to provide high availability without compromising consistency.

- (*) Google Cloud Spanner’s terminology for a cluster is an instance. I avoided to use “instance” because it is an overloaded term and might mean “replica” for the majority of the readers of this article.

- (**) The write is routed to the split leader. Read the Splits section for more.

Thu, Feb 6, 2020

Go has been continuously growing in the past decade, especially

among the infrastructure teams and in the cloud ecosystem.

In this article, we will go through some of the unique strengths

of Go in this field.

We will also cover some gotchas that may not be obvious to the users

at the first sight.

Build small binaries. Go builds small binaries. This makes it a good

language to build artifacts for containerized or serverless environments.

The final artifact with runtime dependencies can be as small as 20-25 MBs.

Runtime initialization is fast. Go’s runtime initialization is fast.

If you are writing autoscaling servers in Go, cold start can’t

be affected by Go’s runtime initialization. Go libraries and frameworks

are also trying to be on the side of fast initialization compared to

some other ecosystem such as JVM languages. The entire

ecosystem contributes to fast process start.

Build static binaries. Go programs compile into a static binary. This allows users

to simplify their final delivery process in most cases. Go binaries can be used

as a final artifact of the CI/CD systems and deployed by copying the binary

to a remote machine.

Cross compile to 64-bit Linux. Go compiler provides cross compilation. Especially

if you don’t have any CGO dependencies, you can easily cross compile to

any operating system and architecture. This allows users to build for their

production environment regardless of their build environment.

For example, regardless of your current environment,

running the following command builds for Linux 64-bit:

$ GOOS=linux GOARCH=amd64 go build

Don’t ship your toolchain. In your production environment, you don’t need

Go toolchain to run Go. The final artifact is a small executable binary. You don’t

have to care about installing and maintaining Go across your servers. Also, don’t

ship containers with Go toolchain. Instead use the toolchain to build

and copy the final binary into the production container.

Rebuild and redeploy with Go releases. Go only supports the last two major

versions. Just because Go runtime is compiled in the body,

with each Go release, rebuild and redeploy your production services.

At Google, we use the release candidates to build production services as soon as

there is an RC version. You can use the RC version for production services, or at

least push to canary with the RC version. If you see an unexpected behavior,

immediately file an issue.

The go tool can print the Go version used to build a binary:

$ go version <binary>

<binary>: go1.13.5

You can additionally use tools like gops

to list and report the Go versions of the binaries currently running on your system.

Embed commit versions into binaries. Embed the revision numbers

when you are building a Go binary.

You can also embed the build constraint and other

options used when building.

debug.BuildInfo

also provides information about the module as well as the dependencies.

Alternatively, go command can report module information and the dependencies:

$ go version -m dlv

dlv: go1.13.5

path github.com/go-delve/delve/cmd/dlv

mod github.com/go-delve/delve v1.3.2 h1:K8VjV+Q2YnBYlPq0ctjrvc9h7h03wXszlszzfGW5Tog=

dep github.com/cosiner/argv v0.0.0-20170225145430-13bacc38a0a5 h1:rIXlvz2IWiupMFlC45cZCXZFvKX/ExBcSLrDy2G0Lp8=

dep github.com/mattn/go-isatty v0.0.3 h1:ns/ykhmWi7G9O+8a448SecJU3nSMBXJfqQkl0upE1jI=

dep github.com/peterh/liner v0.0.0-20170317030525-88609521dc4b h1:8uaXtUkxiy+T/zdLWuxa/PG4so0TPZDZfafFNNSaptE=

dep github.com/sirupsen/logrus v0.0.0-20180523074243-ea8897e79973 h1:3AJZYTzw3gm3TNTt30x0CCKD7GOn2sdd50Hn35fQkGY=

dep github.com/spf13/cobra v0.0.0-20170417170307-b6cb39589372 h1:eRfW1vRS4th8IX2iQeyqQ8cOUNOySvAYJ0IUvTXGoYA=

dep github.com/spf13/pflag v0.0.0-20170417173400-9e4c21054fa1 h1:7bozMfSdo41n2NOc0GsVTTVUiA+Ncaj6pXNpm4UHKys=

dep go.starlark.net v0.0.0-20190702223751-32f345186213 h1:lkYv5AKwvvduv5XWP6szk/bvvgO6aDeUujhZQXIFTes=

dep golang.org/x/arch v0.0.0-20171004143515-077ac972c2e4 h1:TP7YcWHbnFq4v8/3wM2JwgM0SRRtsYJ7Z6Oj0arz2bs=

dep golang.org/x/crypto v0.0.0-20180614174826-fd5f17ee7299 h1:zxP+xTjjk4kD+M5IFPweL7/4851FUhYkzbDqbzkN1JE=

dep golang.org/x/sys v0.0.0-20190626221950-04f50cda93cb h1:fgwFCsaw9buMuxNd6+DQfAuSFqbNiQZpcgJQAgJsK6k=

dep gopkg.in/yaml.v2 v2.2.1 h1:mUhvW9EsL+naU5Q3cakzfE91YhliOondGd6ZrsDBHQE=

FaaS is Go binary as a service. Function-as-a-service products such as

Google Cloud Functions or AWS Lambda serves Go functions. But in fact, they are

building a user function into a binary and serve the binary. This means you

have to organize and build packages acknowledging this fact. Because the final

binary is not forked for every incoming request but is being reused:

- You may have data races if you access to common resources from multiple functions.

- You may need to use

sync.Once in the function to initialize some

of the resources if you need the incoming request to initialize.

- Background goroutines may need to keep working even after the function is finished and

binary is about to be terminated. You may need to flush data manually or gradually

shutdown background routines.

- Providers are not consistent about signaling the Go process before a shutdown.

Expect hard terminations as soon as your function exits.

- You may want to use the incoming request’s context for calls initiated in

the function. In such cases, being able to reuse resources are getting harder.

Gracefully reject incoming requests. When auto scaling down or shutting down new

resources, start rejecting incoming requests to the Go program. http.Server provides

Shutdown for this purpose.

Report the essential metrics. Go runtime and diagnostics tools provide a variety

of essential metrics from the Go programs. Report them to your monitoring systems.

Some of these metrics can be accessible by

runtime.NumGoroutine, runtime.NumThreads, runtime.NumCGOCalls and

runtime.ReadMemStats.

See instrumentation libraries such as Prometheus’ Go library

as a reference on what can be exported.

Print scheduling and GC events. Go can optionally print out scheduling

and GC related events to the standard output. When in production,

you can use the GODEBUG

environmental variable to print out verbose insights from the runtime.

The following command will start the binary and print GC events

as well as the state of the current utilization at every 5000 ms

to the standard out:

$ GODEBUG=gctrace=1,schedtrace=5000 <binary>

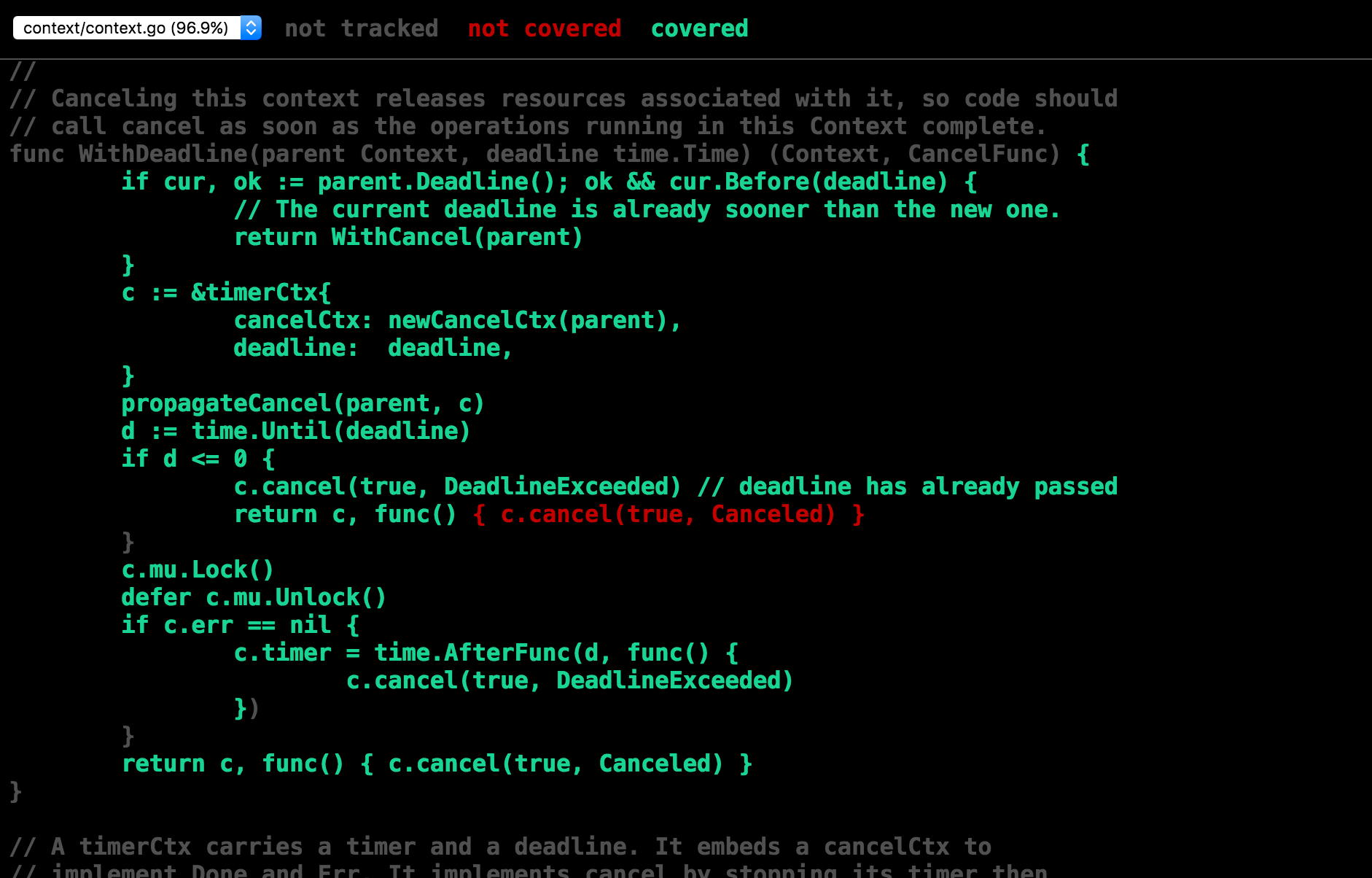

Propagate the incoming context. Go allows propagating the context in the process

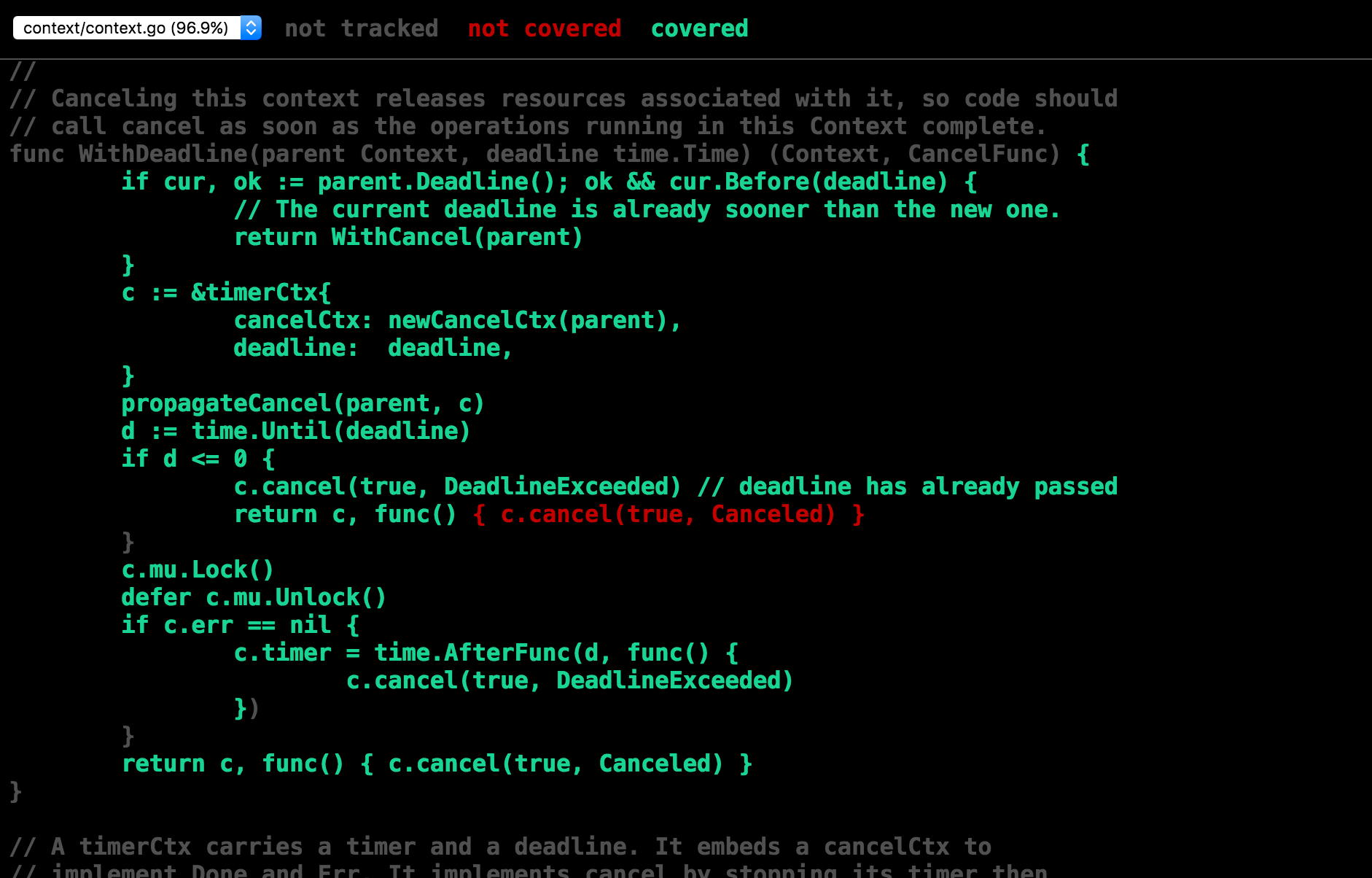

via context.Context.

You can also signal cancellation or timeout decisions

to other goroutines using context. You can use context to propagate values

such as trace/request IDs or other metadata relevant in the critical path.

You can log with context key/values where it applies.

If you have an incoming request context, keep propagating it.

For example:

http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {

// use r.Context() for the calls made here.

})

Continuously profile in production. Go uses pprof which is

a lightweight profiling collection mechanism. It only adds a single-digit

percentage overhead to the execution when enabled. You can utilize this

strength by collection profiles from production systems and understanding

the fleet-wide hotspots to optimize. See

Continuous Profiling of Go programs for more insights

and a reference implementation of a continuous profiling product.

pprof can symbolize the profiling

data by incorporating the binary. If you are collecting

profiles from production, you’d like to store profiling data with symbols.

Even though there is no good standard library function for this task,

there is an existing reference that

can be adopted.

Dump debuggable postmortems. Go allows post-mortem debugging. When running

Go in production, core dumps allow you to retrospectively investigate why binaries

crash. If you have Go programs constantly crashing, you can retrieve their core

dumps and understand why the crashed and which state they were in. You can

also utilize core dumps to debug in production by taking a snapshot (a core dump)

and using your debugger. See core dumps for more.

Mon, Jan 20, 2020

Go’s defer keyword allows us to schedule a function to run

before a function returns. Multiple functions

can be deferred from a function. defer is often used

to cleanup resources, finish function-scoped tasks, and

similar.

Deferring functions are great for maintability.

By deferring, for example, we reduce the risk

of forgetting to close the file

in the rest of the program:

func main() {

f, err := os.Open("hello.txt")

if err != nil {

log.Fatal(err)

}

defer f.Close()

// The rest of the program...

}

Deferring helps us by delaying the execution of the Close method

while allowing us to type it when we have the right context.

This is how deferred functions also help the readability

of the source code.

How defer works

Defer handles multiple functions by stacking them hence running

them in LIFO order. The more deferred functions you have,

the larger the stack will be.

func main() {

for i := 0; i < 5; i++ {

defer fmt.Printf("%v ", i)

}

}

The above program will output “4 3 2 1 0 ” because the last

deferred function will be the first one to be executed.

When a function is deferred, the variables accessed by it

are stored as its arguments. For each deferred function,

compiler generates a runtime.deferproc call at the call site and

call into runtime.deferreturn at the return point of the function.

0: func run() {

1: defer foo()

2: defer bar()

3:

4: fmt.Println("hello")

5: }

The compiler will generate code similar to below for the program above:

runtime.deferproc(foo) // generated for line 1

runtime.deferproc(bar) // generated for line 2

// Other code...

runtime.deferreturn() // generated for line 5

Defer used to require two expensive runtime calls

explained above. This made deferring functions

to be significantly more expensive than non-deferred functions.

For example, consider to lock and unlock a sync.Mutex

deferred and not-deferred.

var mu sync.Mutex

mu.Lock()

defer mu.Unlock()

The program above will work 1.7x slower than the non-deferred

version. Even though it only takes ~25-30 nanoseconds to lock and

unlock a mutex by deferring, it makes a difference in large

scale use or in cases where a function call need to be completed

under 10 nanoseconds.

BenchmarkMutexNotDeferred-8 125341258 9.55 ns/op 0 B/op 0 allocs/op

BenchmarkMutexDeferred-8 45980846 26.6 ns/op 0 B/op 0 allocs/op

This overhead is why Go developers started to avoid

defers in certain cases to improve performance.

Unfortunately this situation make Go developers

compromise readability.

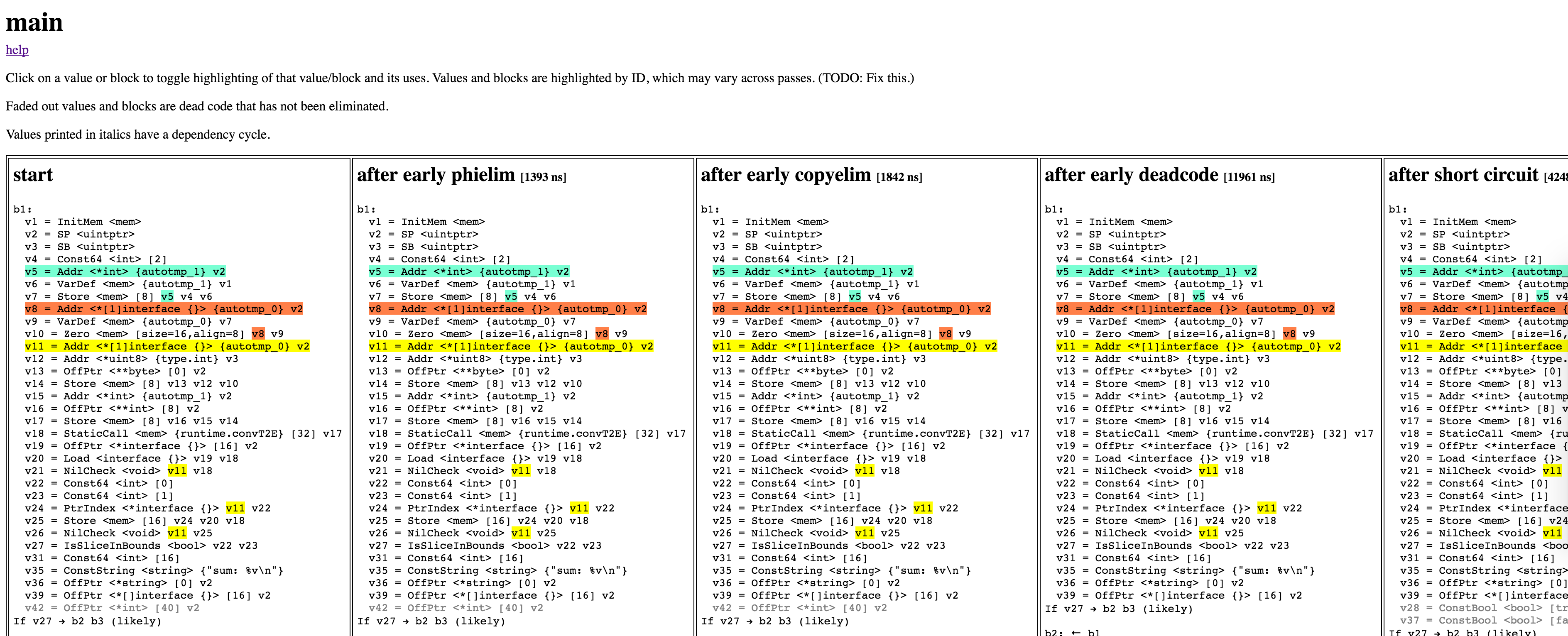

Inlining deferred functions

In the last few versions of Go, there have been gradual improvements

to defer’s performance. But with Go 1.14, some common cases

will see a highly significant performance improvement. The compiler

will generate code to inline some of the

deferred functions at return points. With this improvement,

calling into some deferred functions will be only as expensive as

making a regular function call.

0: func run() {

1: defer foo()

2: defer bar()

3:

4: fmt.Println("hello")

5: }

With the new improvements, above code will generate:

// Other code...

bar() // generated for line 5

foo() // generated for line 5

It is possible to do this improvement only in static cases. For example,

in a loop where the execution is determined by the input size dynamically,

the compiler doesn’t have the chance to generate code to inline all

the deferred functions. But in simple cases

(e.g. deferring at the top of the function or in conditional blocks

if they are not in loops), it is

possible to inline the deferred functions. With 1.14, easy cases will be

inlined and runtime coordination will be only required if the compiler

cannot generate code.

I already tried the Go 1.14beta with

the mutex locking/unlocking example above. Deferred and non-deferred

versions perform very similarly now:

BenchmarkMutexNotDeferred-8 123710856 9.64 ns/op 0 B/op 0 allocs/op

BenchmarkMutexDeferred-8 104815354 11.5 ns/op 0 B/op 0 allocs/op

Go 1.14 is a good time to reevaluate deferring if you avoided defers

for performance gain.

If you are looking for more about this improvement, see the

Low-cost defers through inline code proposal and

GoTime’s recent episode on defer with

Dan Scales.

Disclaimer: This article is not peer-reviewed but thanks to Dan Scales

for answering my questions while I was investigating this improvement.

Mon, Nov 18, 2019

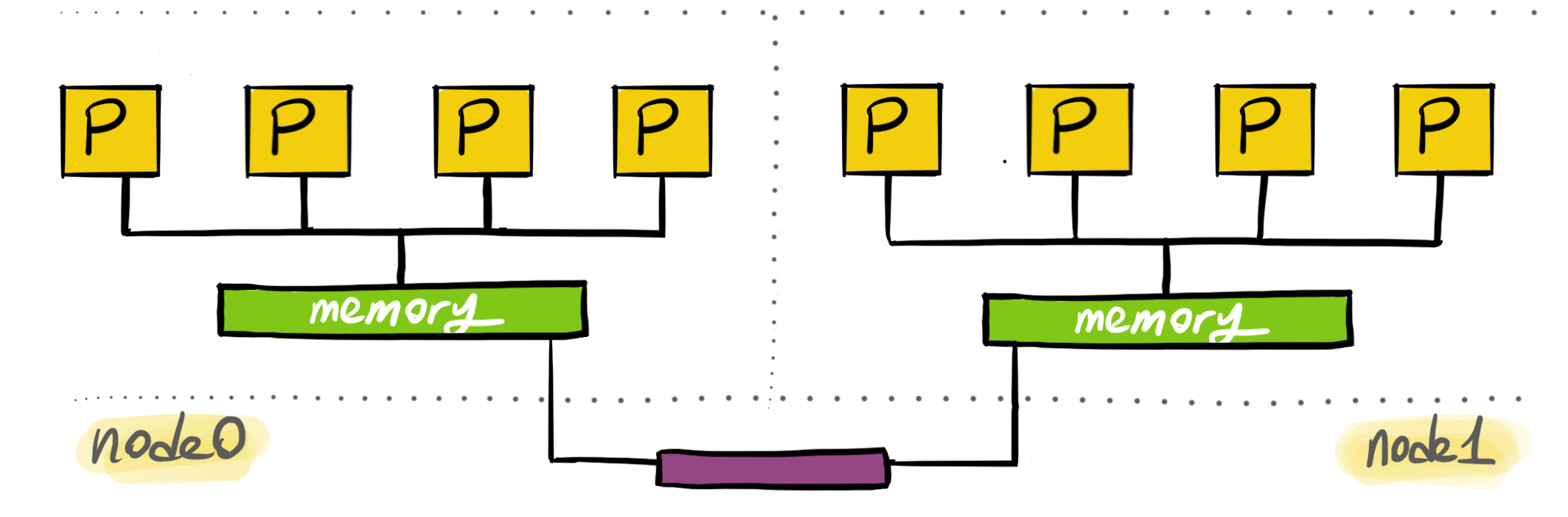

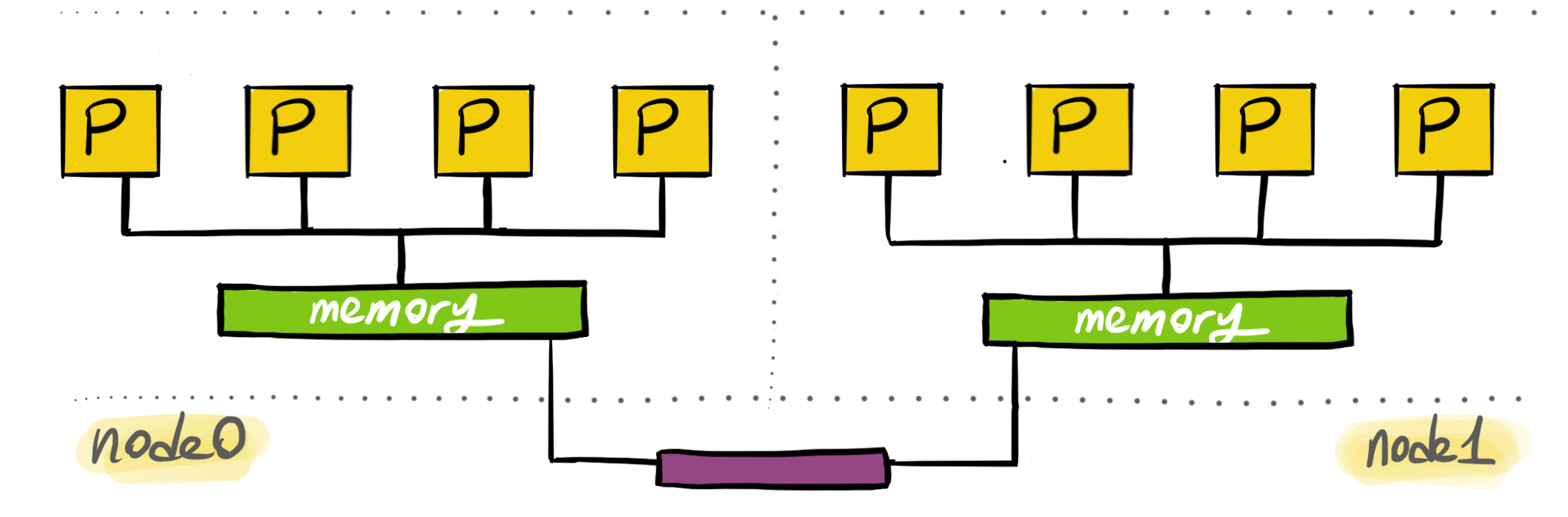

Non-uniform memory access (NUMA) is an approach to optimize memory access time

in multi-processor architectures. In NUMA architectures, processors can access

to the memory chips near them instead of going to the physically distant ones.

In the distant past CPUs generally ran slower than the memory.

Today, CPUs are quite faster than the memory they use. This became a problem

because processors constantly started to wait for data to be retrieved from the

memory. As a result of such data starvation problems, for example,

CPU caches became a popular addition to modern computer architecture.

With multi processors architectures, the data starvation problem became worse.

Only one processor can access the memory at a time. With multiple processors trying

to access to the same memory chip resulted in further wait times for the processors.

NUMA is an architectural design and a set of capabilities trying to address problems

such as:

- Data-intensive situations where processors are starving for data.

- Multi-processor architectures where processors non-optimally race for memory access.

- Architectures with large number of processors where physical distance to memory is a problem.

Nodes

NUMA architectures consist of an array of processors closely located to a memory.

Processors can also access the remote memory but the access is slower.

In NUMA, processors are grouped together with local memory. These groups

are called NUMA nodes. Almost all modern processors also contains a non-shared memory

structure, CPU caches. Access to the caches are the fastest, but shared memory is

required if data needs to be shared. In shared cases, access to the local memory

is the fastest.

Processors also have access to remote memory.

The difference is that accessing remote memory is slower

because the interconnect is slower.

NUMA architectures provide APIs for the users to set fine-tuned affinities. The

main way how this works is to allocate memory for a thread on its local node.

Then, make the thread running in the same node. This will allow the optimal

data access latency for memory.

Linux users might be familiar with sched_setaffinity(2) which allows its users to

lock a thread on a specific processor. Think about NUMA APIs as a way to

lock a thread to a specific set of processors and memory. Even if the thread is preempted,

it will only start rerunning on a specific node where data locality is optimal.

NUMA in Linux

Linux has NUMA support for a while. NUMA supports provides

tools, syscalls and libraries.

numactl allows to set affinities and gather information about

your existing system. To gather information about the NUMA nodes,

run:

$ numactl --hardware

You can set affinities of a process you are launching. The following

will set the CPU node affinity of the launching process

to node 0 and will only allocate from 0.

$ numactl --cpubind=0 --membind=0 <cmd>

You can allocate memory preferable on the given node. It will still

allocate memory in other nodes if memory can’t be allocated on the

preferred node:

$ numactl --preferred=0 <cmd>

You can launch a new process to allocate always from the local memory:

$ numactl --localalloc <cmd>

You can selectively only execute on the given CPUs. In the following case,

the new process will be executed either on processor 0, 1, 2, 5 or 6:

$ numactl --physcpubind=0-2,5-6 <cmd>

See numactl(8) for the full list of capabilities.

Linux kernel supports NUMA architectures by providing some syscalls. From user programs,

you can also call the syscalls. Alternatively, numa

library provides an API for the same capabilities.

Optimizations

Your programs may need NUMA:

- If it’s clear from the nature of the problem that memory/processor affinity is needed.

- If you have been analyzing CPU migration patterns and locking a thread to a node can improve the performance.

Some of the following tools could be useful diagnosing the need for optimizations.

numastat(8) displays some

hits or misses per node. You can see if memory is allocated as intended on the

prefered nodes. You can also see how often memory is allocated on local vs remote.

numatop allows you to inspect the local vs remote

memory access stats of the running processes.

You can see the distance between nodes via numactl --hardware to avoid allocating from distant nodes.

This will allow you to optimize to avoid the overhead of the interconnect.

If you need to further analyze thread scheduling and migration patterns, tracing tools such as

Schedviz might be useful to visualize kernel scheduling decisions.

One more thing…

Recently, on the Go Time podcast, I briefly mentioned how I’m directly calling into

libnuma from Go programs for NUMA affinity. I think, everyone was thinking

I was joking. In Go, runtime scheduler doesn’t allow you to have precise

control or doesn’t expose the underlying processor/architecture. If you are running

highly CPU intensive goroutines with strict memory access requirements, NUMA bindings

might be an option.

One thing you need to make sure is to lock the OS thread, so the goroutine is kept

being scheduled to run on the same OS thread which can set its affinity via NUMA APIs.

DON’T TRY THIS HOME (unless you know what you are doing):

import "github.com/rakyll/go-numa"

func main() {

if !numa.IsAvailable() {

log.Fatalln("NUMA is not available on this machine.")

}

// Make sure the underlying OS thread

// doesn't change because NUMA can only

// lock the OS thread to a specific node.

runtime.LockOSThread()

// Runs the current goroutine always in node 0.

numa.SetPreferred(0)

// Allocates from the node's local memory.

numa.SetLocalAlloc()

// Do work in this goroutine...

}

Please don’t use this if you are 100% sure what you are doing. Otherwise,

it will limit the Go scheduler and your programs will

see a significant performance penalty.

Wed, Dec 27, 2017

It is real struggle to work with a new language,

especially if the type doesn’t resemble what you have previously

seen. I have been there with Go and lost my

interest in the language when it first came out due to the

reason I was pretending it is something I already knew.

Go is considered as an object-oriented language even though it lacks type hierarchy.

It has an unconventional type system. It is expected

to do the things differently in this language given the traditional paradigms are not always going to help the Go users.

This article contains a few gotchas.

Program flow first, types later

In Go, program flow

and behavior are not tightly coupled to the abstractions. You don’t start programming by thinking about the types but rather the flow/behavior. As you need to represent your data

in more sophisticated ways, you start introducing your types.

More recently Rob Pike shared his thoughts on the separation

of data and behavior:

… the more important idea is the separation of concept:

data and behavior are two distinct concepts in Go, not

conflated into a single notion of “class”.

– Rob Pike

Go has a strong emphasis on the data model. Structs (which are

aggregate types) provide a light-weight way to represent data.

The lack of type hierarchy helps structs to keep being thin,

structs never represent the layers and layers of inherited

behavior but only the data fields. This makes them closer to the

data structures they represent rather than the behavior

they are additionally providing.

Embedding is not inheritance

Code reuse is not provided by type hierarchy but via composition.

Language ecosystems with classical

inheritance is often suffering from excessive level of indirection

and premature abstractions based on inheritance which later makes the code complicated and unmaintainable.

Instead of providing type hierarchy, Go allows composition

and dispatching of the methods via interfaces. The language

allows embedding and most people

assume the language has some limited

support for sub-classing types – this is not true.

Embedding is really not very different than having a regular field

but allows you to embed the methods on the embedded type directly

into the new type.

Consider the following struct:

type File struct {

sync.Mutex

rw io.ReadWriter

}

Then, File objects will directly have access to sync.Mutex methods:

f := File{}

f.Lock()

It is no different than providing Lock and Unlock methods from File

and make them operate on a sync.Mutex field. This is not sub-classing.

Polymorphism

Due to lack of sub-classing, polymorphism in Go is achieved

only with interfaces. Methods are dispatched during runtime

depending on the concrete type.

var r io.Reader

r = bytes.NewBufferString("hello")

buf := make([]byte, 2048)

if _, err := r.Read(buf); err != nil {

log.Fatal(err)

}

Above, r.Read will be dispatched to (*Buffer).Read.

Please note that embedding is not sub-classing,

embedding types can not be assigned to what they

are embedding. The following code is not

going to compile:

type Animal struct {}

type Dog struct {

Animal

}

func main() {

var a Animal

a = Dog{}

}

No explicit interface implementations

Go doesn’t have an implements that explicitly allowing you to

tell you are implementing an interface. It assumes you are implementing an interface if the method signature matches the

one in the interface definition.

How does this scale? Is it possible to accidentally implement

interfaces you didn’t mean to implement? Although mechanically

possibly, it has never been an issue for our user base to pass

an implementation of one interface mistakenly for another one.

Interfaces often are widely different, or

it is sign there might not be a need of a second interface

if two interfaces are quite similar.

We have a culture of not introducing new interfaces but prefer

to use the ones provided by the standard library or use the established ones from the community.

This culture also reduces the number of similar

looking interfaces.

No header files or no culture of “let’s introduce interfaces first”.

If you don’t want to provide multiple implementations of the same

high-level behavior, you don’t introduce interfaces.

Naming patterns based on other languages'

dependency inversion conventions are anti-patterns in Go.

Naming styles such the following don’t fit

into the Go ecosystem.

type Banana interface {

//...

}

type BananaImpl struct {}

One more thing…

Go prefer small interfaces.

You can always embed interfaces later but

you cannot decompose large ones.

No constructors

Go doesn’t have constructors hence doesn’t allow you

to override the default constructor. Default

construction always result in zero-valued fields.

Go has a philosophy to use zero-value to represent the

default. Utilize zero-value as much as possible to provide

the default behavior.

Some structs may require more work such as validation,opening a

connection, etc before becoming useful to the user. In such cases,

we prefer initialization functions.

func NewRequest(method, url string, body io.Reader) (*Request, error)

NewRequest validates method and url, sets up the right internals

to read from the given body and returns a request.

Nil receivers

Nil is a value, nil value of a type can implement behavior.

Developers don’t have to provide concrete types for

noop implementations.

If you are introducing an interface, only to provide a noop

implementation of your concrete type, don’t do it.

Below, event logging will be a noop for the nil values.

type Event struct {}

func (e *Event) Log(msg string) {

if e == nil {

return

}

// Log the msg on the event...

}

Then user can use the nil value for the noop behavior:

var e *Event

e.Log("this is a message")

No generics

Go doesn’t have generics.

There are ongoing conversations happening on what kind of generics

would be a good fit for Go. Given the unique type system, it is not

easy to just copy an existing approach and assume it will be

useful for the majority and will be

orthogonal to the existing language features.

Go Experience Reports are waiting for user input to see what kind of use cases could have

helped the Go users if Go had generics.

Tue, Oct 10, 2017

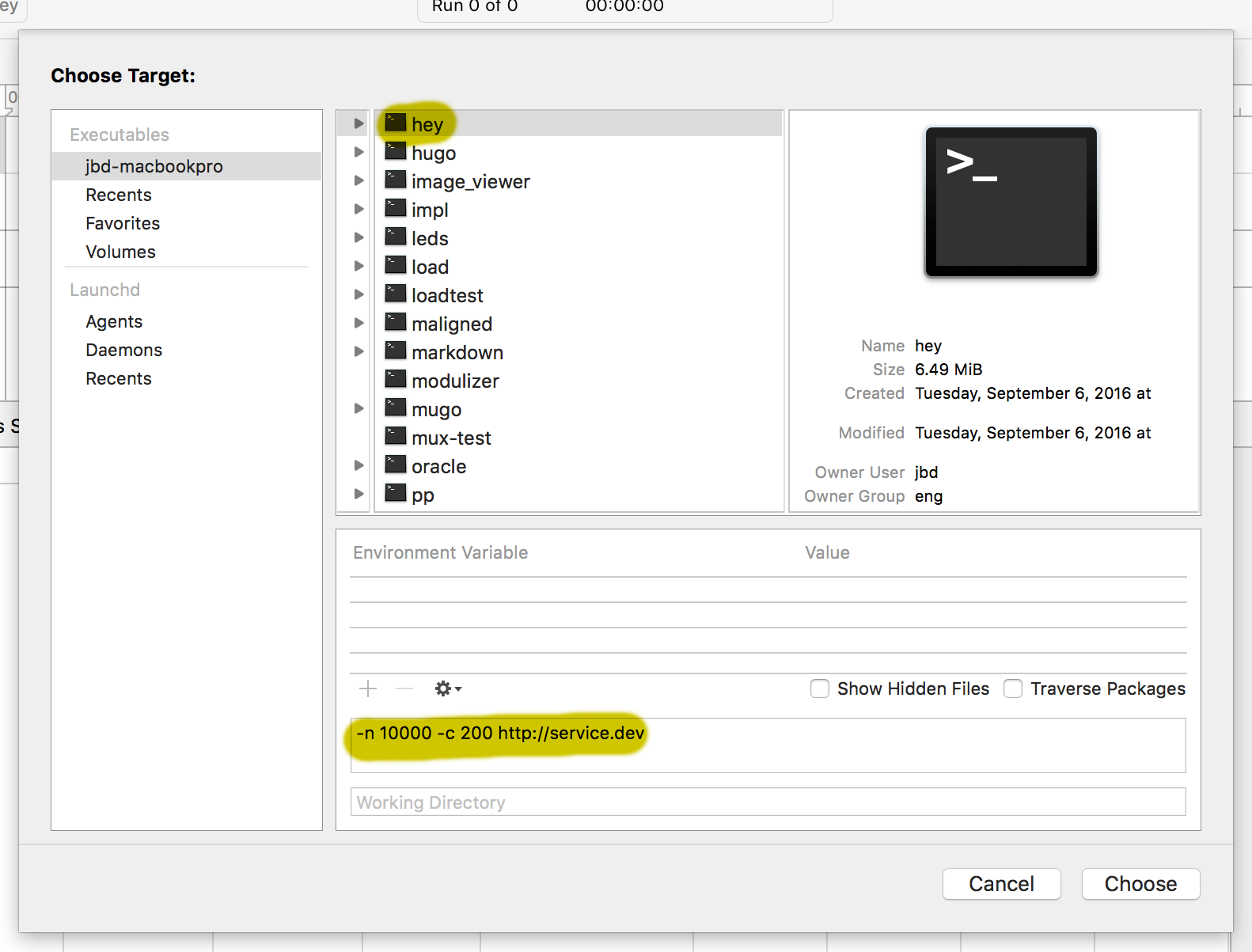

pprof now is coming with a Web UI. In order to try it out,

go get the pprof tool:

$ go get github.com/google/pprof

The tool launches a web UI if -http flag is provided. For example,

in order to launch the UI with an existing profile data, run the

following command:

$ pprof -http=:8080 profile.out

You can focus, ignore, hide, and show with regexp. As well as clicking

on the boxes and using the refining menu also works:

You can peek, list, and disassemble and box. Especially listing is

a frequently used feature to understand the cost by line:

You can also have the regular listing view and use regexp filtering

to focus, ignore, hide and show.

Recently, web UI added support for flame graphs. The pprof tool is now able to display flame

graphs without any external dependencies!

The Web UI is going to be in Go 1.10, but you can try it by go getting

from head and report bugs and improvements!

Tue, Jul 18, 2017

Note: This article contains non-finalized ideas; we may end up not implementing any of this but ideally we should do work towards the direction explained here.

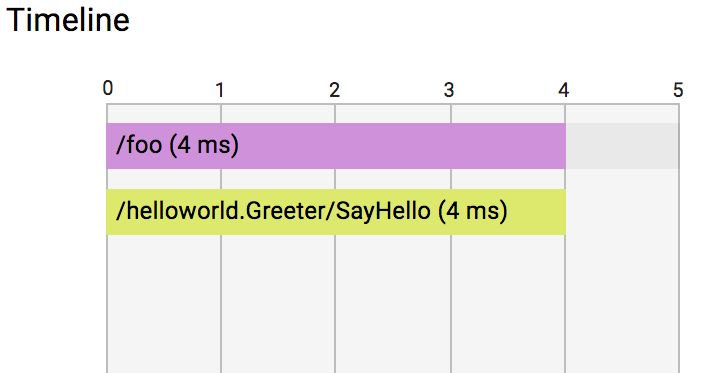

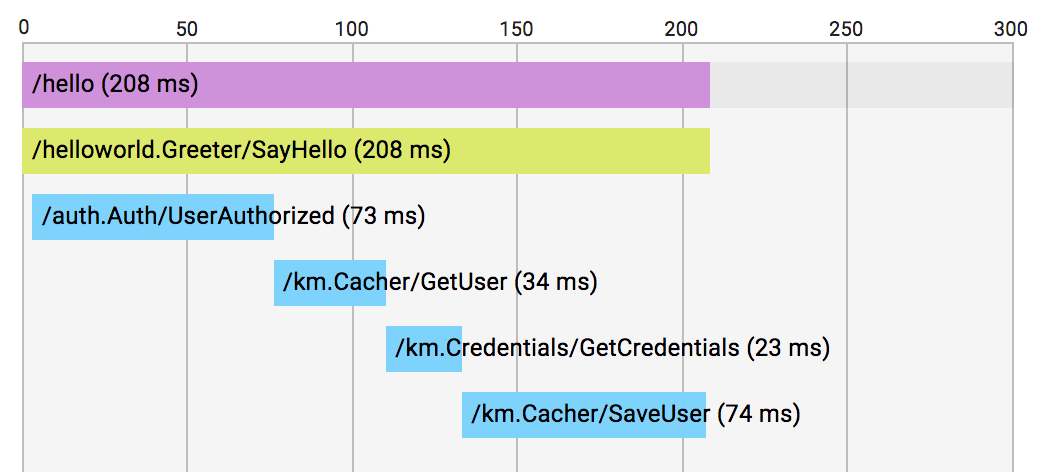

Go is the language to write servers, Go is the language to write microservices. Yet, we haven’t done much in the past for latency analysis and observability/diagnostics of request/RPC performance.

GopherCon 2017 was an opportunity for me to discuss our roadmap for latency analysis. I have talked to many whose main job is to provide instrumentation solutions to the ecosystem.

A few common problems have been pointed out by pretty much everyone I talked to:

- Instrumentation requires manual labor. Go code cannot be auto-instrumented by intercepting calls.

- Lack of a standard library package; third party packages cannot provide out-of-the-box instrumentation without external dependencies.

- Dropped traces; libraries don’t know how to propagate traces to the outside world. We need a

context.Context key to propagate traces and be able to discover the current trace by looking into the incoming context.

- Lack of standard library support; e.g. packages like

database/sql can be instrumented to create spans for each ExecContext if the given context has already has a trace ID.

- Lack of diagnostics data available from runtime per trace ID. It would be ideal to be able to record runtime events (e.g. scheduling events) with trace IDs and then pull them to further investigate low-level runtime events happened in the lifetime of a request.

Apart from the Go-specific issues, we often came back to the problem of the wild fragmentation in the tracing community and how the lack of the compatibility among tracing backends damage the possibility of establishing more in the library space.

There is not much we can do beyond the boundaries of Go other than advocating for a requirement to fix fragmentation which I already personally do.

Instrumentation

We are currently not interested to solve (1) in a fashion other languages do by providing primitives that can intercept every call. Initially there is a lot to be done by creating a common instrumentation library and putting manual spans in place. A common instrumentation layer also solves the problems explained at (2) and (3).

To address these items, we will propose a package with a trace context representation, FromContext/NewContext to propagate trace context via context.Context, and a small API to create/end/annotate spans.

Users will be able to start and stop trace collection in a Go program dynamically; and export collected trace data.

Users will need to write transformation code if they would like to follow an existing distributed trace (e.g. an existing Zipkin trace propagated via an incoming HTTP request’s header).

Once we establish a package, we can revise the standard library packages to see where we can inject out-of-the-box instrumentation. Some existing ideas:

database/sql: A span can be created for each ExecContext and finished when exec is completed to measure latency.os/exec: A span can be created for CommandContext to measure command exec latency.net/http: http.Transport can create spans for outgoing requests.

The next steps for net/http is to be able to propagate traces via http.Request. Ideally we want http.Transport to inject the right trace context header to the outgoing requests and http.Handlers to extract trace contexts into req.Context. The wild fragmentation in the tracing backends don’t help us much here. Each backend requires a different encoding/decoding to serialize/deserialize trace contexts and different HTTP headers to put them in place. There is an ongoing effort to unify things in this area and we will wait for it rather than trying to meet the backend-specific requirements.

There is also an experimental work to annotate runtime events recorded by the execution tracer with trace IDs, which will address the basic requirements of (5). If you need to collect more precise data on what else is happening in the lifetime of a trace, you will be optionally record runtime events and attach them to the current trace.

Visualization

Nothing has been planned so far to visualize the per-node data. We expect the exported data can be transformed into the data format of the user’s existing distributed tracing backend and visualized there. For those who are looking for a local env setup, I suggest Zipkin given it is very easy to run it locally as a standalone service. I am in also favor of maintaining high-quality transformation drivers for Zipkin or OpenTracing somewhere outside of the standard lib.

Conclusion

We have clearer idea what we want to achieve in the scope of Go for latency profiling. The next steps are converting these ideas into proposals and discuss them with the broader Go community, give feedback to the tracing community for the standardization efforts, and create awareness of these concepts and tools.

Sun, Jul 16, 2017

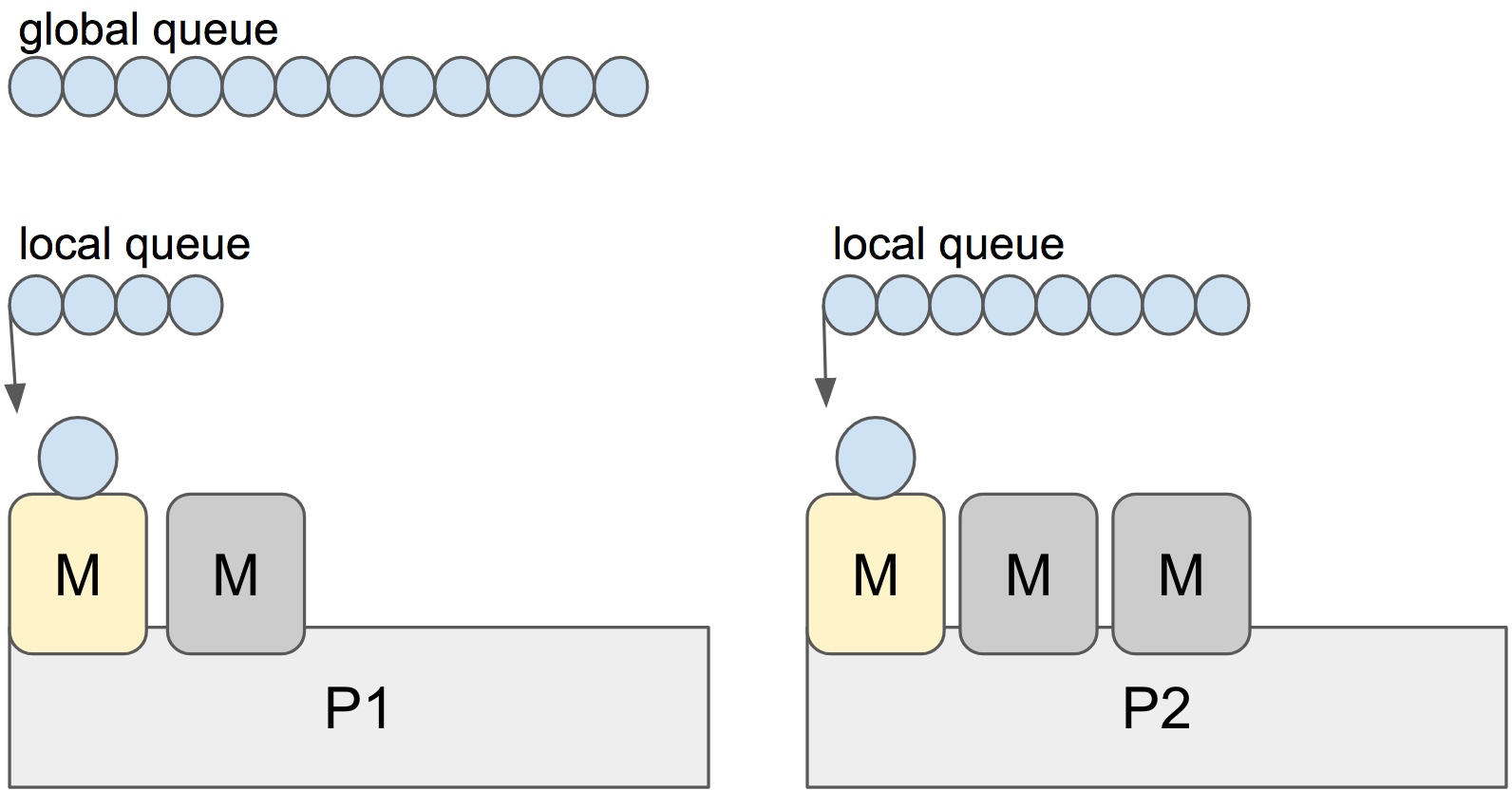

Go scheduler’s job is to distribute runnable goroutines over

multiple worker OS threads that runs on one or more processors.

In multi-threaded computation, two paradigms have emerged in scheduling: work sharing and work stealing.

- Work-sharing: When a processor generates new threads, it attempts to migrate some of them to the other processors with the hopes of them being utilized by the idle/underutilized processors.

- Work-stealing: An underutilized processor actively looks for other processor’s threads and “steal” some.

The migration of threads occurs less frequently with work stealing

than with work sharing. When all processors have work to run, no threads are being migrated. And as soon as there is an idle processor, migration is considered.

Go has a work-stealing scheduler since 1.1, contributed by Dmitry Vyukov. This article will go in depth explaining what work-stealing schedulers are and how Go implements one.

Scheduling basics

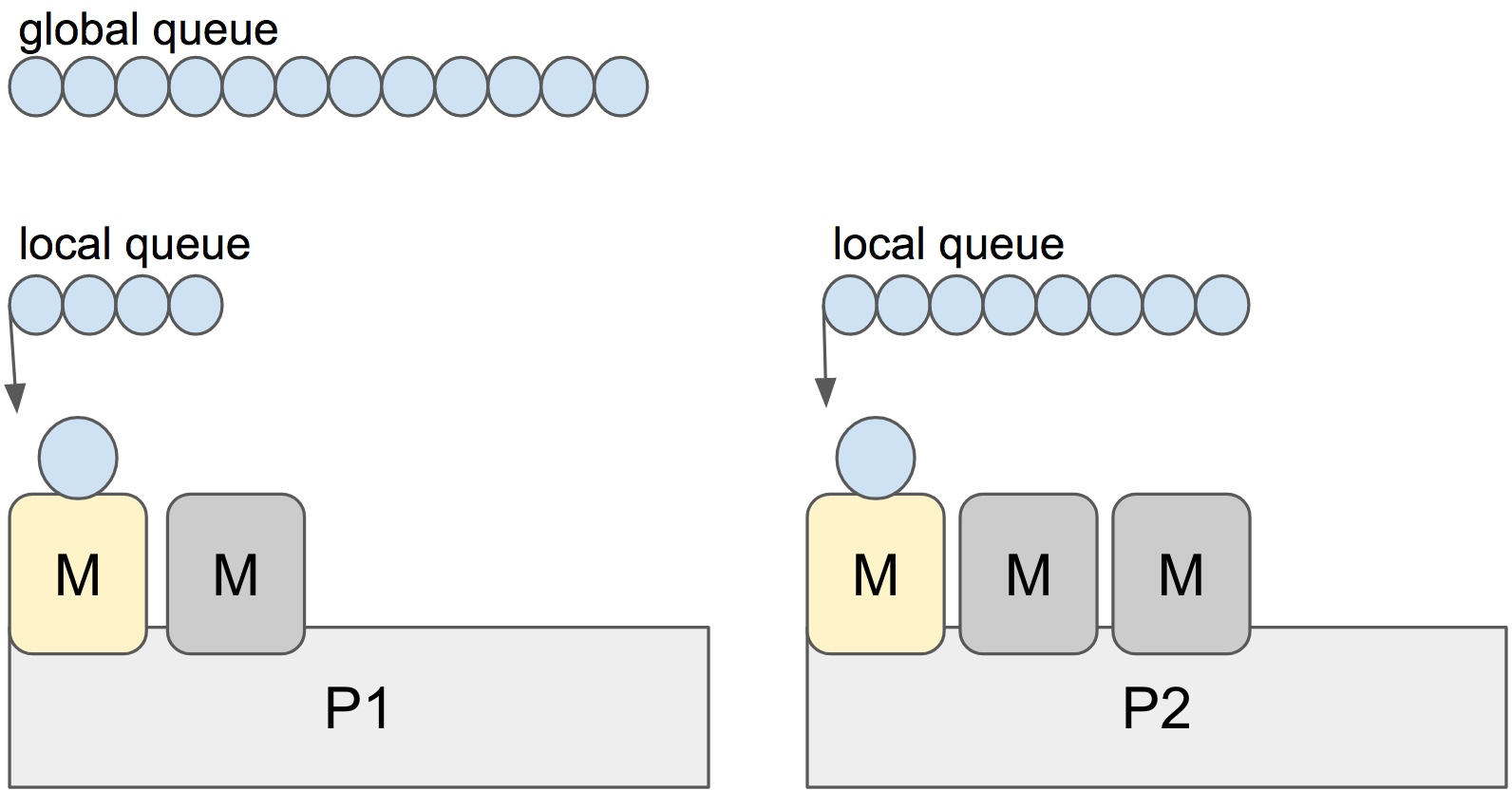

Go has an M:N scheduler that can also utilize multiple processors. At

any time, M goroutines need to be scheduled on N OS threads that runs on at most GOMAXPROCS numbers of processors.

Go scheduler uses the following terminology for goroutines, threads and processors:

- G: goroutine

- M: OS thread (machine)

- P: processor

There is a P-specific local and a global goroutine queue.

Each M should be assigned to a P. Ps may have no Ms if they are blocked or in a system call.

At any time, there are at most GOMAXPROCS number of P. At any time, only one M can run per P.

More Ms can be created by the scheduler if required.

Each round of scheduling is simply finding a runnable goroutine and executing it.

At each round of scheduling, the search happens in the following order:

runtime.schedule() {

// only 1/61 of the time, check the global runnable queue for a G.

// if not found, check the local queue.

// if not found,

// try to steal from other Ps.

// if not, check the global runnable queue.

// if not found, poll network.

}

Once a runnable G is found, it is executed until it is blocked.

Note: It looks like the global queue has an advantage over the local queue

but checking global queue once a while is crucial to avoid M is only scheduling

from the local queue until there are no locally queued goroutines left.

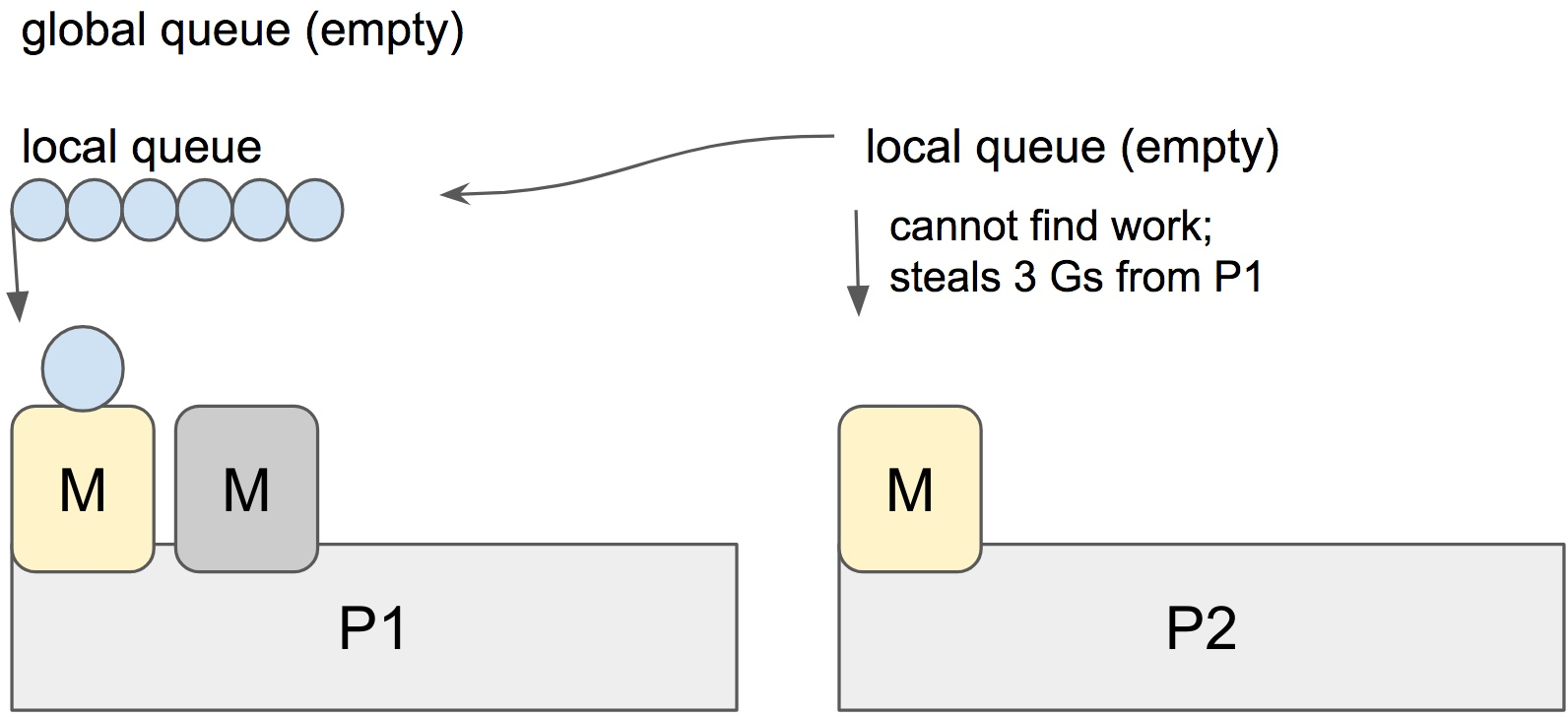

Stealing

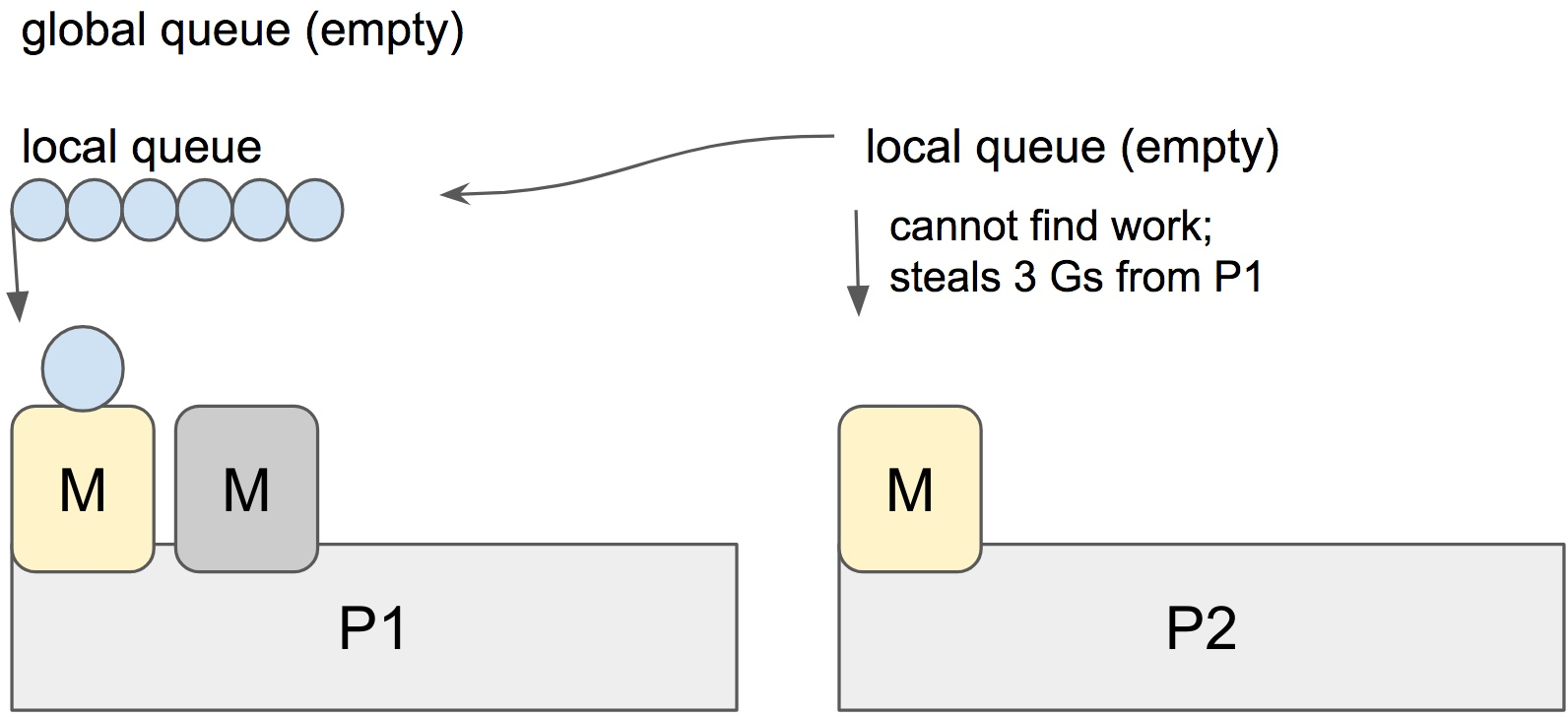

When a new G is created or an existing G becomes runnable, it is pushed onto a list of runnable goroutines of current P. When P finishes executing G, it tries to pop a G from own list of runnable goroutines. If the list is now empty, P chooses a random other processor (P) and tries to steal a half of runnable goroutines from its queue.

In the case above, P2 cannot find any runnable goroutines. Therefore, it randomly picks another processor (P1) and steal three goroutines to its own local queue. P2 will be able to run these goroutines and scheduler work will be more fairly distributed between multiple processors.

Spinning threads

The scheduler always wants to distribute as much as runnable goroutines to Ms to utilize the processors but at the

same time we need to park excessive work to conserve CPU and power. Contradictory to this, scheduler should also need to be able to scale to high-throughput and CPU intense programs.

Constant preemption is both expensive and is a problem for high-throughput programs if the performance is critical. OS threads shouldn’t frequently hand-off runnable goroutines between each other, because it leads to increased latency. Additional to that in the presence of syscalls, OS threads need to be constantly blocked and unblocked. This is costly and adds a lot of overhead.

In order to minimize the hand-off, Go scheduler implements “spinning threads”. Spinning threads consume a little extra CPU power but they minimize the preemption of the OS threads.

A thread is spinning if:

- An M with a P assignment is looking for a runnable goroutine.

- An M without a P assignment is looking for available Ps.

- Scheduler also unparks an additional thread and spins it when it is readying a goroutine if there is an idle P and there are no other spinning threads.

There are at most GOMAXPROCS spinning Ms at any time. When a spinning thread finds work, it takes itself out of spinning state.

Idle threads with a P assignment don’t block if there are idle Ms without a P assignment. When new goroutines are created or an M is being blocked, scheduler ensures that there is at least one spinning M. This ensures that there are no runnable goroutines that can be otherwise running; and avoids excessive M blocking/unblocking.

Conclusion

Go scheduler does a lot to avoid excessive preemption of OS threads by scheduling them to the right and underutilized processors by stealing, as well as implementing “spinning” threads to avoid high occurrence of blocked/unblocked transitions.

Scheduling events can be traced by the execution tracer. You can investigate what’s going on if you happen to believe you have poor processor utilization.

References

Mon, Jul 3, 2017

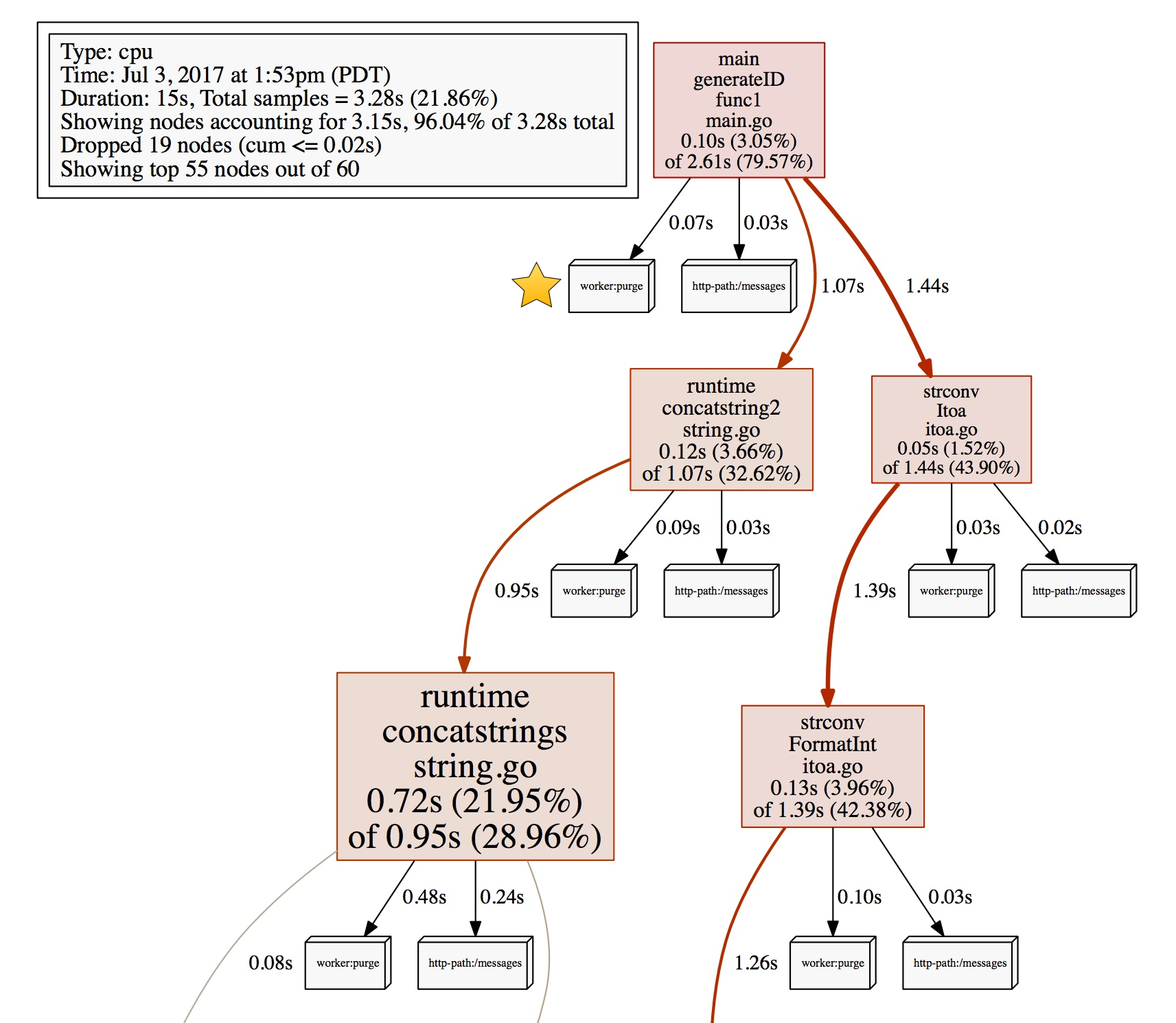

Go 1.9 is introducing profiler labels, a way to add arbitrary key-values to the samples collected by the CPU profiler. CPU profilers collect and output hot spots where the CPU spent most time in when executing. A typical CPU profiler output is primarily reports the location of these spots as function name, source file/line, etc. By looking at the data, you can also examine which parts of the code invoked these spots. You can also filter by invokers to have more granular understanding of certain execution paths.

Even though locality information is useful to spot expensive execution paths, it is not always essentially enough when debugging a performance problem. A significant percentage of Go programmers uses Go to write servers, and it is even more complex to point out performance issues in a server. It is hard to isolate certain execution paths from others, or hard to understand whether it is only a certain path creating trouble (e.g. a user or a specific handler).

With 1.9, Go is introducing a new feature that allows you to record additional information to provide more context about the execution path. You will be able to record any set of labels, as a part of the profiling data. Then, use these labels to examine the profiler output more precisely.

You can benefit from profiler labels in many cases. Some of the obvious ones:

- You don’t want to leak your software abstractions into the examination of the profiling data; e.g. a profiling dashboard of a web server will be useful if it displays handler URL paths, rather than function names from the Go code.

- Execution stack location is not enough to understand the originator of work; e.g. a consumer that reads from a message queue does work originated somewhere else, the consumer can set labels to identify the originator.

- Context-bound information is required to debug profiling problems.

Adding labels

The runtime/pprof package will export several new APIs to let users add labels. Most users will use Do which takes a context, extends it with labels, records these labels when f is executing:

func Do(ctx context.Context, labels LabelSet, f func(context.Context))

Do only set the given label set during the execution of the current goroutine. If you want to start goroutines in f, you can propagate the labels by passing the context argument of the function.

labels := pprof.Labels("worker", "purge")

pprof.Do(ctx, labels, func(ctx context.Context) {

// Do some work...

go update(ctx) // propagates labels in ctx.

})

The work above will be labeled with worker:purge.

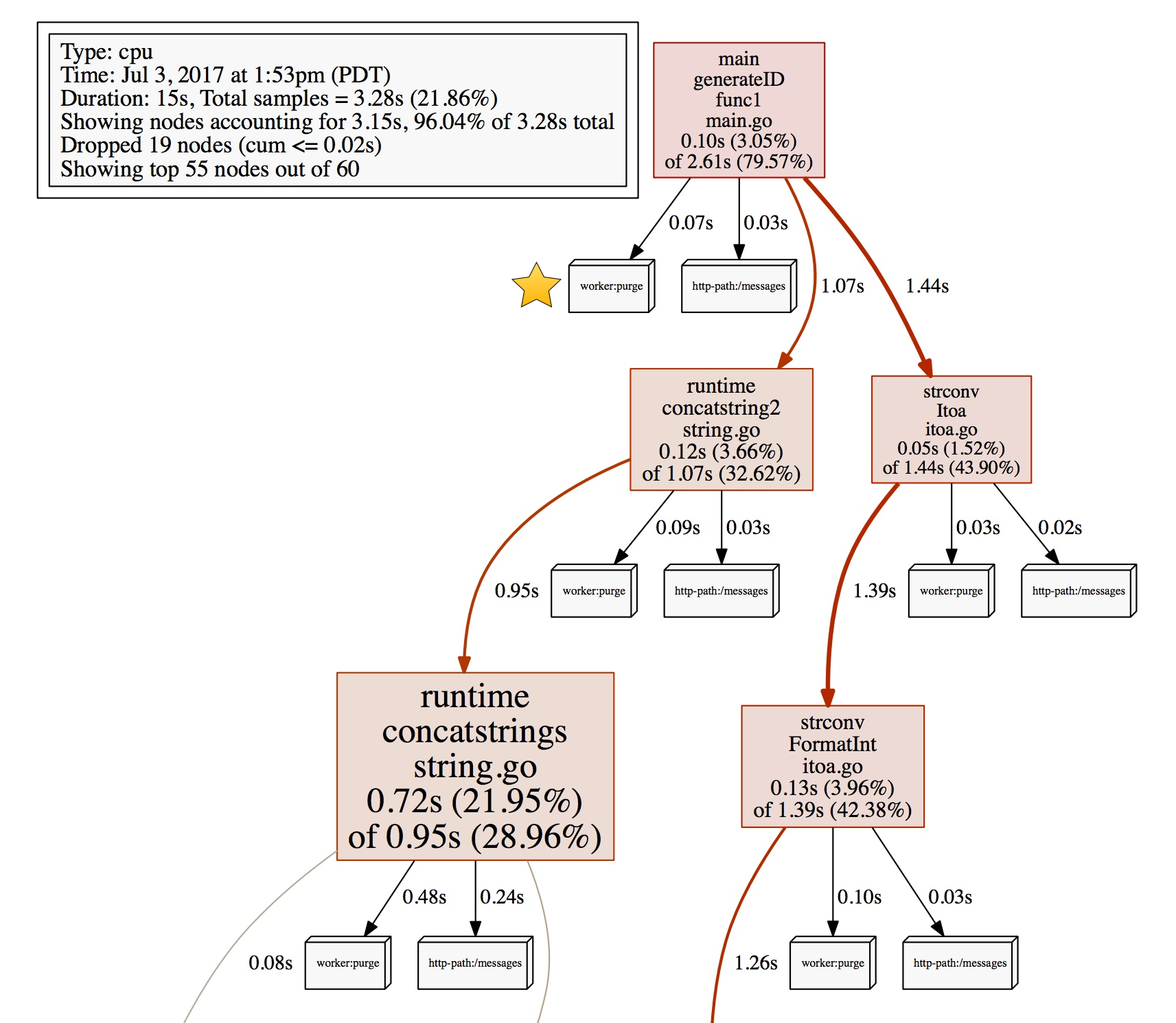

Examining the profiler output

This section will demonstrate how to examine the recorded samples by profiler labels. Once you annotate your code with labels, it is time to profile and consume the profiler data with tag filters.

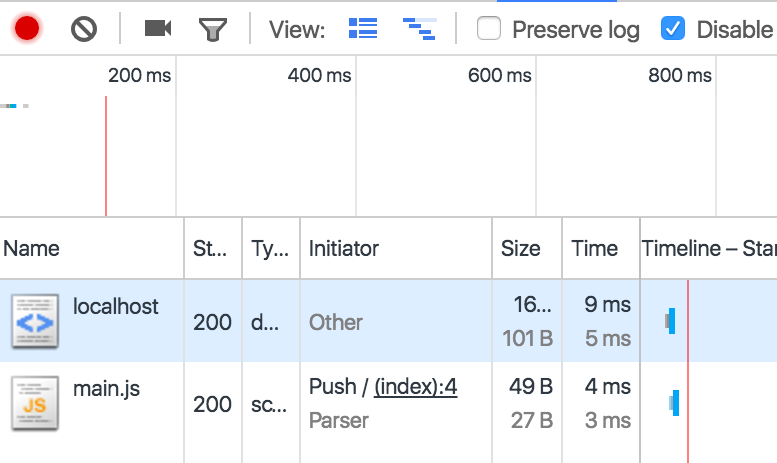

I will use the net/http/pprof package to capture samples in this demo, see the Profiling Go programs article for more options.

package main

import _ "net/http/pprof"

func main() {

// All the other code...

log.Fatal(http.ListenAndServe("localhost:5555", nil))

}

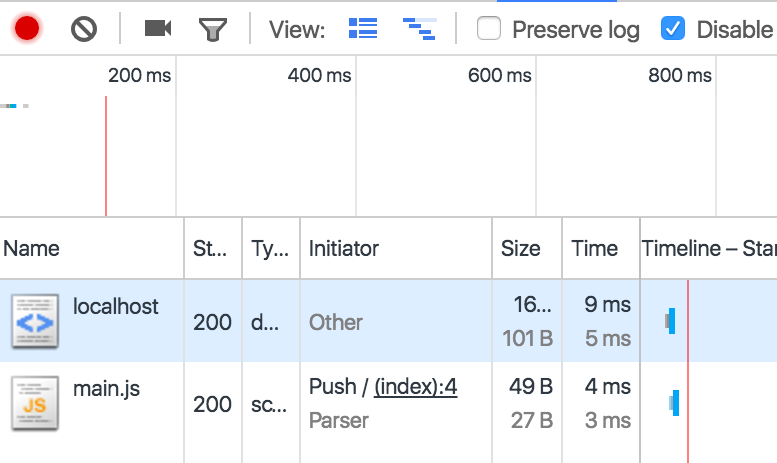

Collect some CPU samples from the server.

$ go tool pprof http://localhost:5555/debug/pprof/profile

Once the interactive mode starts, you can list the recorded labels by the tags command. Note that pprof tools call them tags even though they are named labels in the Go standard library.

(pprof) tags

http-path: Total 80

70 (87.50%): /messages

10 (12.50%): /user

worker: Total 158

158 ( 100%): purge

As you can see, there are two label keys (http-path, worker) and several values recorded for each. http-path key is coming from HTTP handlers I annotated, and worker:purge is originated at the code above.

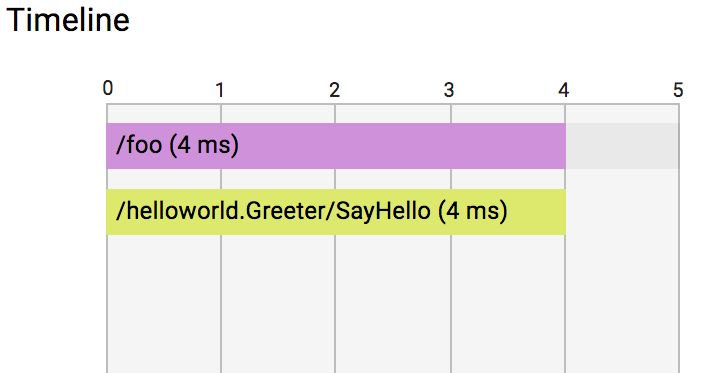

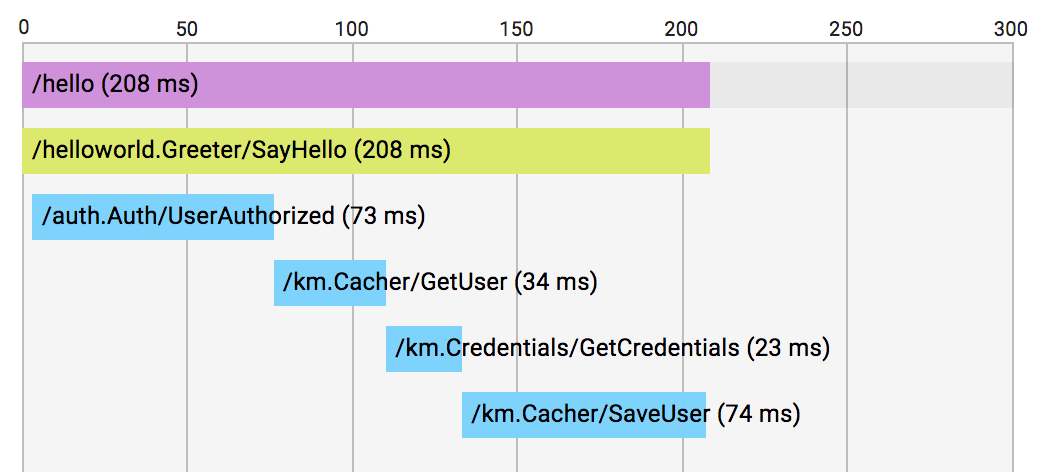

By filtering by labels, we can focus only on the samples collected from the /user handler.

(pprof) tagfocus="http-path:/user"

(pprof) top10 -cum

Showing nodes accounting for 0.10s, 3.05% of 3.28s total

flat flat% sum% cum cum%

0 0% 0% 0.10s 3.05% main.generateID.func1 /Users/jbd/src/hello/main.go

0.01s 0.3% 0.3% 0.08s 2.44% runtime.concatstring2 /Users/jbd/go/src/runtime/string.go

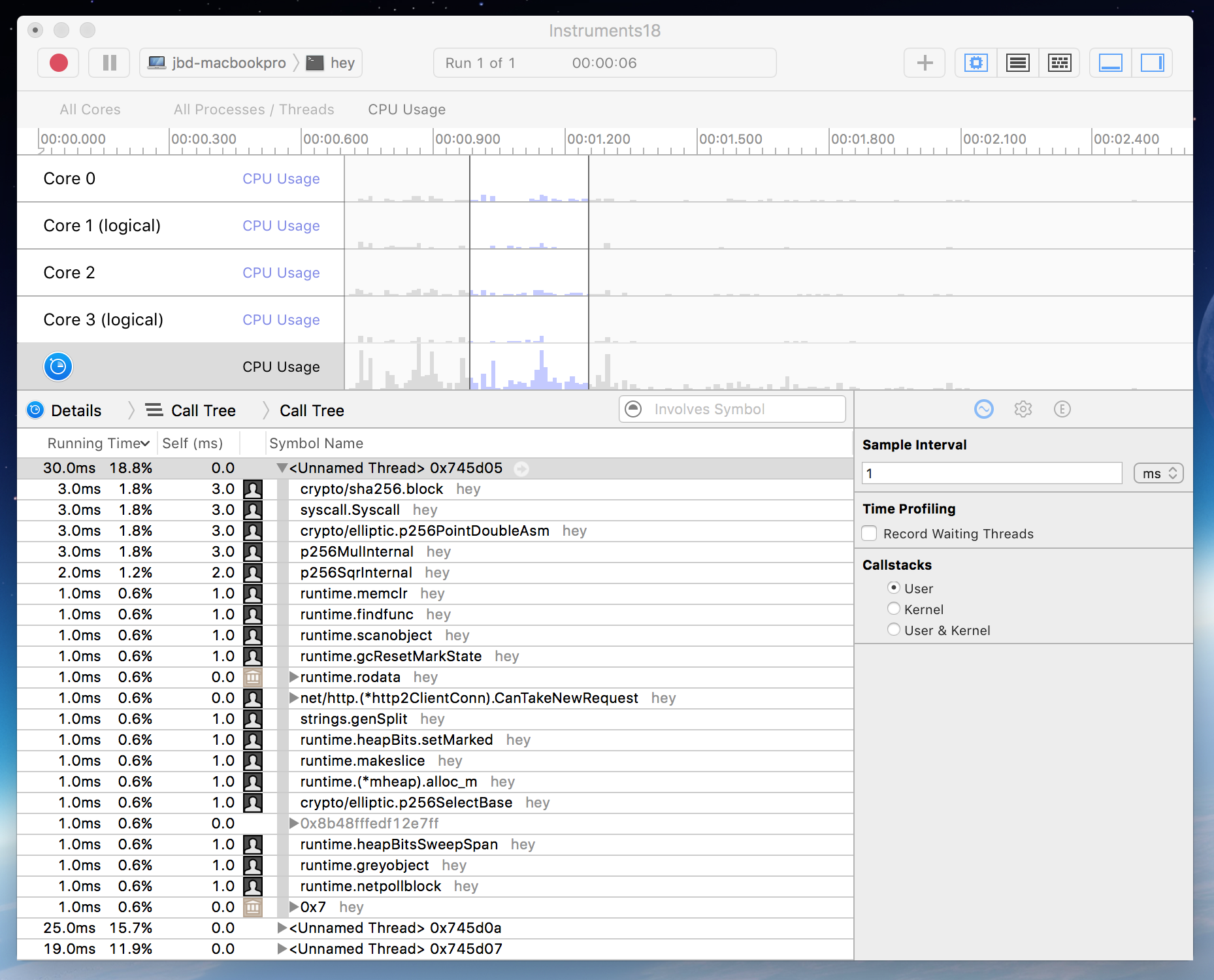

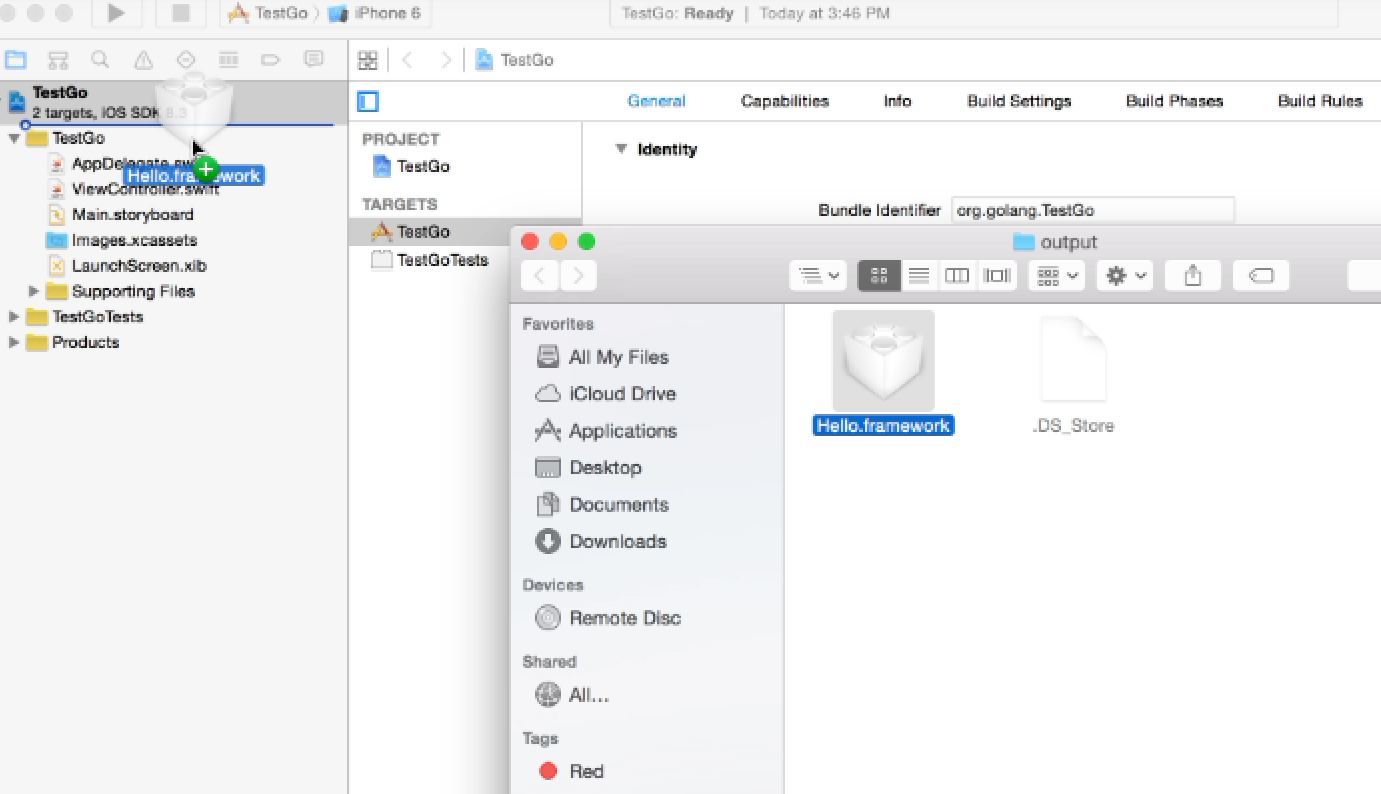

0.06s 1.83% 2.13% 0.07s 2.13% runtime.concatstrings /Users/jbd/go/src/runtime/string.go